Parameters in Photogrammetric Software for Small-Format Oblique

Camera System on Unmanned Aerial Vehicle

Bannakulpiphat, T.1 and Santitamnont, P.1,2,*

1Department of Survey Engineering, Chulalongkorn University,

Bangkok 10330, Thailand

2Center of Excellence in Infrastructure Management,

Chulalongkorn University, Bangkok, Thailand

*Corresponding Author

Abstract

Utilizing small-format oblique camera systems to capture

simultaneous nadir and oblique photographs from unmanned aerial

vehicles (UAVs) is a common practice in modern photogrammetry.

Oblique photographs provide enhanced geometric insights into

building side views, terrain morphology, and vegetation, thereby

enriching interpretation and classification. However, the design of

camera rig parameters and their precise mathematical modeling for

small-format oblique camera systems in multi-view processing is

essential to ensure accurate representation of the physical camera

geometry and results. This study investigates the camera rig

parameters of the '3DM-V3' small-format oblique camera system,

focusing specifically on the relative relationship between nadir

and oblique cameras, within two prominent photogrammetric software packages:

PIX4Dmapper and Agisoft Metashape. The research concludes that

optimal parameterization involves fully constrained relative

translation parameters $(T_X, T_Y, T_Z)_{rel}$ for the four oblique cameras, while the

relative rotation parameters $(R_X, R_Y, R_Z)_{rel}$ are given approximate initial

estimates and left free constrained.

This approach aligns with the physical geometry of the camera

system and yields a precise camera model, as confirmed through

bundle block adjustment (BBA) computations. PIX4Dmapper yields

horizontal and vertical root mean square errors (RMSE) of 0.023 m

and 0.019 m, respectively, while Agisoft Metashape results in RMSE

of 0.018 m and 0.046 m. These RMSE values, considering the ground

sample distance and ground control point accuracy, reflect the

robustness of the approach. The insights from this research offer

valuable guidance for industries, facilitating informed decisions

regarding the selection of appropriate software and parameters for

small-format oblique camera systems mounted on UAVs, thus ensuring

consistency between theoretical models and real-world applications.

Keywords: Camera Model, Camera Rig, Oblique Camera,

Oblique Photograph, UAV, Small-format Oblique Camera Systems

1. Introduction

Multi-head camera systems are widely used in modern photogrammetry for

capturing simultaneous nadir and oblique photographs from unmanned

aerial vehicles (UAVs) [1], [2] and [3]. These systems provide oblique

photographs rich in geometric details, such as side views of buildings

and the vegetation canopy on the terrain. Such details significantly

enhance interpretation and classification compared to traditional nadir

photographs [4] and [5]. Multi-head camera systems improve the

information available for mapping and increase the efficiency of

mapping missions. They facilitate the acquisition of multi-view

photographs, leading to an extensive dataset for subsequent multi-view

geometry and point-cloud processing. This enhancement is crucial for

improved keypoint generation, which plays a vital role in automated

processing using photogrammetric computer vision software [6] and [7].

Historically, unmanned aerial vehicles (UAVs) were primarily developed

and used by the military for surveillance, reconnaissance, and other

tactical purposes [8] and [9]. Recently, however, UAVs have been

extensively adopted for civilian and commercial applications, ranging

from package delivery and agriculture to mapping and engineering

inspection.

Many of these applications necessitate the creation of 3D maps and

point clouds, which serve to reference the geographic location of

terrain and aid in design [10] and [11]. Photogrammetric mapping

software heavily relies on aerial photographs taken by a single camera

installed on a UAV. However, the accuracy of the resulting maps and

models is influenced by various factors, including the instrument used,

mission planning and execution methods, and software for image

processing [12]. Addressing these challenges, a notable development in

the industry is the emergence of manufacturers producing small-format

oblique camera systems. Designed for integration with off-the-shelf

UAVs for mapping and inspection purposes, these systems have garnered

interest due to their affordability and ease of operation. While UAVs

equipped with these cameras still operate under national air traffic

control, they offer a safer and significantly more

cost-effective alternative to manned aircraft equipped with large-format

oblique camera systems.

Researchers have explored the use of oblique camera systems with

multiple cameras due to the advantageous image orientation these

systems offer. Specifically, multi-camera systems possess superior ray

intersection geometry compared to nadir-only image blocks. However,

these systems face challenges stemming from factors such as varying

scale, occlusion, and atmospheric influences, which are difficult to

model and can complicate image matching and bundle adjustment tasks.

For instance, Gerke et al. [13] examined the processing of oblique

airborne image sets, focusing on tie point matching across different

viewing directions, bundle block adjustment accuracy, the influence of

overlap on accuracy, and the distribution of control points and their

impact on point accuracy. Karel et al. [14] introduced a method to

significantly reduce the number of tie points, and consequently the

unknowns, before the bundle block adjustment, ensuring the preservation

of orientation and quality calibration for aerial blocks from

multi-camera platforms. Alsadik et al. [15] presented a hybrid

acquisition system tailored for UAV platforms, merging multi-view

cameras with LiDAR scanners to enhance data collection. Their research

underscores the synergies between LiDAR and photogrammetry, emphasizing

their combined potential to enhance data quality. They also highlight

the importance of these hybrid systems in efficiently generating 3D

geospatial information. While such systems can significantly increase

multi-view mapping information and image overlap, it is crucial to

account for the oblique effect introduced by angled cameras to the

structure of the camera system, particularly in terms of the angles and

distances of the cameras in a multi-head, small-format oblique camera

system.

The objective of this research is to examine the parameters of the

camera rig and their numerical modeling in the “3DM-V3”

small-format oblique camera systems. The aim is to understand the

impact of different photogrammetric software packages on processing

aerial photographs, especially the relative relationship between the

nadir camera and oblique cameras within these systems. To accomplish

this, mathematical models were created and compared using two

commonly used photogrammetric software packages: PIX4Dmapper

(version 4.7.5) and Agisoft Metashape (version 1.8.3). Both are

globally recognized in the photogrammetric computer vision field

and provide settings for the parameters and weighting of the camera

rig. Highlighting discrepancies in results based on the software

packages broadens understanding about UAVs equipped with oblique

camera systems and the application of Structure-from-Motion (SfM)

technologies. In this study, the same aerial photographs used in a

prior study by Bannakulpiphat et al. [16] were processed. The

conclusions from their research underlined the significance of

optimizing camera rig parameters for UAV-mounted small-format

oblique camera systems to ensure alignment with actual rig

geometries. This paper examines the variations in camera rig

parameters across the photogrammetric software and compares these

parameters with the real-world dimensions of the oblique camera

systems, ensuring consistent camera geometry. Furthermore, the

multi-view geometry techniques offered by both software packages

are used to compare the supplementary coordinates derived from 3D

measurements.

2. Materials and Methods

2.1 Data Acquisition

The study area for this research was the geodetic GNSS and UAV testing

field at the Center of Learning Network for Region (CLNR), supervised

by the Faculty of Engineering, Department of Surveying Engineering.

This field is located in the province of Saraburi, central Thailand

(Latitude: 14.523676°N, Longitude: 101.023542°E) covering an area of

approximately 0.8 km². A map of the area is shown in Figure 1(a). The

aerial photographs used for this research were captured using a

vertical take-off and landing (VTOL) aerial vehicle, named Loong 2160

VTOL, as depicted in Figure 1(b). This VTOL was equipped with a

"FOXTECH 3DM-V3" small-format oblique camera system, designed

specifically for mapping and surveying purposes, and is shown in Figure

1(c).

Figure 1: (a) Distribution of the GCPs (yellow

triangles); (b) Loong 2160 VTOL used for the study; and (c) the FOXTECH 3DM-V3 camera

Table 1: UAV and camera specifications

| Specification | Detail |
|---|---|
| Model | Loong 2160 VTOL |
| Camera | FOXTECH 3DM-V3 Oblique Camera for Mapping and Survey |
| Sensor (width x height, mm) | 23.5 x 15.6 |
| Image (width x height, pixels) | 6000 x 4000 |
| Focal length, mm | 25.2 (Nadir camera) / 35.7 (Oblique camera) |
| Total pixels | 120 megapixels (24 megapixels per camera) |
| Oblique lens angle, degrees | 45 |

The camera system consists of one nadir camera and four 45-degree

oblique cameras, each enclosed in a rig with its own SD card slot for

separate storage. The technical specifications of the UAV and camera

used in this study are summarized in Table 1.

The flight mission was conducted in a single-strip block format,

typical of conventional photogrammetric mapping. The flight route,

managed by ARDUPILOT software, maintained an average altitude of 150

meters above ground level, with a forward overlap of 80% and a side lap of

60%. The mission comprised 12 flight lines and captured a total of

2,995 photographs, averaging 599 photographs per camera. Ground control

points (GCPs) were measured on the same day using the real-time

kinematics (RTK) GNSS technique, achieving a horizontal accuracy of 2

cm and a vertical accuracy of 5 cm. A total of 14 GCPs were distributed

across the study area (see Figure 1a) and used to georeference the

photogrammetric block.
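The relationship between flying height, focal length and pixel size can be checked with a short calculation. The following is a minimal sketch, assuming a vertical nadir photograph over flat terrain and using only the values given in Table 1 and in the mission description; the function and variable names are illustrative and do not come from either software package. With these inputs the nadir ground sample distance comes out at roughly 2.3 cm.

```python
# Camera and flight values taken from Table 1 and the mission description.
SENSOR_WIDTH_MM = 23.5
IMAGE_WIDTH_PX = 6000
NADIR_FOCAL_MM = 25.2
FLYING_HEIGHT_M = 150.0
TOTAL_PHOTOS = 2995
CAMERA_COUNT = 5


def ground_sample_distance(height_m, focal_mm, sensor_width_mm, image_width_px):
    """Return the nadir GSD in metres per pixel (vertical photo, flat terrain)."""
    pixel_size_mm = sensor_width_mm / image_width_px
    # Scale the pixel size by the ratio of flying height to focal length.
    return height_m * pixel_size_mm / focal_mm


gsd_m = ground_sample_distance(
    FLYING_HEIGHT_M, NADIR_FOCAL_MM, SENSOR_WIDTH_MM, IMAGE_WIDTH_PX
)
footprint_width_m = gsd_m * IMAGE_WIDTH_PX      # ground width covered by one nadir image
photos_per_camera = TOTAL_PHOTOS // CAMERA_COUNT

print(f"Nadir GSD: {gsd_m * 100:.2f} cm/pixel")
print(f"Ground footprint across the sensor width: {footprint_width_m:.1f} m")
print(f"Photographs per camera: {photos_per_camera}")
```

The oblique cameras have a longer focal length and a tilted view, so their ground resolution varies across each image and is not captured by this simple formula.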

2.2 Mathematical Modeling of the Small-Format Oblique Camera

Systems

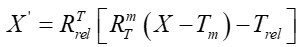

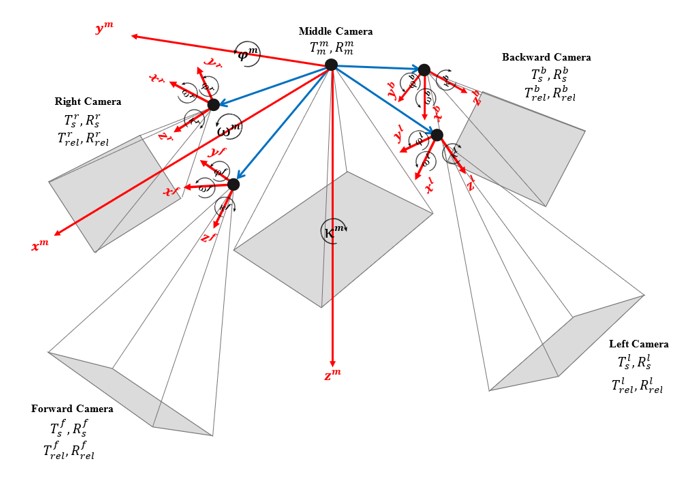

Small-format oblique camera systems consist of multiple cameras mounted

on a camera-rig structure, necessitating a mathematical model that

effectively links these different cameras.

To develop an accurate camera rig model, understanding the precise

relationship between each secondary camera and the reference camera on

the camera rig is essential. This relationship is defined by the

relative translation and rotation parameters, which describe the exact

geometric characteristics of the cameras' interrelationship. These rig

parameters are crucial for subsequent bundle block adjustment (BBA),

wherein the orientation parameters of these cameras are optimized

simultaneously to create a 3D model of the object or area of interest.

Thus, the mathematical modeling of a small-format oblique camera system

involves the following geometric relationship characteristics:

- A single camera in a small-format oblique camera system is typically designated as the reference camera and has a known position ($T_m$) and orientation ($R_m$) in the world coordinate system.

- The other cameras are secondary cameras, each with its own position ($T_s$) and orientation ($R_s$) in the same world coordinate system.

- Each secondary camera is defined relative to the reference camera by a known relative translation ($T_{rel}$) and rotation ($R_{rel}$).

The exterior orientation parameters of every camera in the multi-head

camera system, except for the reference camera, are computed using

specific equations. The reference camera serves as the benchmark for

calculating the positions and orientations of the secondary cameras, as

detailed in Equations (1) and (2):

$$T_s = T_m + R_m T_{rel} \qquad (1)$$

$$R_s = R_m R_{rel} \qquad (2)$$

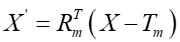

The positions of a three-dimensional point in the camera

coordinate systems of the reference camera ($X'_m$) and the secondary camera ($X'_s$) can be

given by Equation (3) and Equation (4), respectively.

$$X'_m = R_m^{T}\,(X - T_m) \qquad (3)$$

$$X'_s = R_s^{T}\,(X - T_s) \qquad (4)$$

where $T$ represents the position of the camera projection center

in the world coordinate system, $R$ represents the rotation matrix

that defines the camera orientation, $X$ represents a 3D point

in the world coordinate system, and $X'$ represents a 3D point

in the camera coordinate system [17]. Understanding these geometric

relationships is fundamental for bundle block adjustment (BBA), where

the orientation parameters of the cameras are optimized simultaneously

to generate a 3D model of the target object or area. This mathematical

modeling of the small-format oblique camera system provides essential

insights into the precise geometric characteristics governing the

relationships between different cameras on the rig. As a result, it

enables more advanced tasks like 3D modeling and aerial mapping to

produce results consistent with the real world. Therefore, the relative

translation (Trel) and rotation (Rrel)

parameters are crucial in defining the modeling of the small-format

oblique camera system, ensuring both precision and real-world geometric

consistency. The small-format oblique camera systems utilized in this

research are illustrated in Figure 2 and detailed further in Table

2 and Table 3.
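The rig relationships in Equations (1) and (2) can be illustrated numerically. The sketch below is a minimal example, not the implementation of either software package. It assumes that a world point is mapped into a camera frame by X' = R^T (X - T), which is the convention implied by Equations (1) and (2), and it uses a hypothetical reference pose and a single-axis 45-degree tilt in place of the full three-angle rotation. The 30 mm and 20 mm offsets are the initial values listed for the forward camera in Table 5, converted to metres.

```python
import math


def mat_mul(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def mat_vec(a, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(a[i][k] * v[k] for k in range(3)) for i in range(3)]


def transpose(a):
    return [[a[j][i] for j in range(3)] for i in range(3)]


def rot_y(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(deg):
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def secondary_pose(t_m, r_m, t_rel, r_rel):
    """Pose of a secondary camera from the reference pose and the rig parameters."""
    offset_world = mat_vec(r_m, t_rel)
    t_s = [t_m[i] + offset_world[i] for i in range(3)]   # Equation (1)
    r_s = mat_mul(r_m, r_rel)                            # Equation (2)
    return t_s, r_s


def world_to_camera(x_world, t, r):
    """Assumed convention: X' = R^T (X - T)."""
    return mat_vec(transpose(r), [x_world[i] - t[i] for i in range(3)])


# Hypothetical reference (nadir) pose: position in metres, heading of 30 degrees.
T_m = [100.0, 200.0, 150.0]
R_m = rot_z(30.0)

# Rig parameters: offsets in metres, illustrative single-axis tilt of -45 degrees.
T_rel = [0.030, 0.000, 0.020]
R_rel = rot_y(-45.0)

T_s, R_s = secondary_pose(T_m, R_m, T_rel, R_rel)

# A hypothetical ground point seen by both cameras.
X = [120.0, 210.0, 0.0]
X_m = world_to_camera(X, T_m, R_m)
X_s_direct = world_to_camera(X, T_s, R_s)

# The same point obtained through the rig only: X'_s = R_rel^T (X'_m - T_rel).
X_s_via_rig = mat_vec(transpose(R_rel), [X_m[i] - T_rel[i] for i in range(3)])

max_difference = max(abs(a - b) for a, b in zip(X_s_direct, X_s_via_rig))
print("Secondary camera position:", [round(v, 4) for v in T_s])
print("Largest difference between the two routes:", max_difference)
```

Both routes give the same camera-frame coordinates up to floating-point rounding, which is why a bundle block adjustment can estimate one pose per exposure for the reference camera and share the rig parameters across all exposures.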

2.3 Photogrammetric Processing

The goal of photogrammetric processing in this study was to establish a

mathematical model for the rig parameters of a small-format oblique

camera system. The results were evaluated in terms of geometry and

uncertainty using two commercial UAV processing software applications,

namely PIX4Dmapper and Agisoft Metashape. These applications use

photogrammetric computer vision techniques to align photographs through

structure-from-motion, constrain block datum with ground control points

(GCPs), and simultaneously solve the bundle block adjustment (BBA) with

camera self-calibration by assigning proper weight to these

observations. The software calculates the relationship between the

photographic coordinates and the camera model, linking them to the

coordinates of the GCPs.

Additionally, the software can process aerial photographs and calculate

the relationship of the camera rig parameters, based on the relative

positions between the reference camera and the secondary cameras. When

processing nadir and oblique photographs, additional settings account

for the camera rig parameters to bridge the cameras. The camera rig

parameters, crucial for linking small-format oblique camera systems in

this study, facilitate the measurement of relative displacements among

cameras and their individual physical characteristics.

Figure 2: Exterior orientation parameters and the

mathematical model of small-format oblique camera systems

Table 2: Symbols for each camera parameter matrix in

the small-format oblique camera systems

Table 3: Mathematical relationships between the

reference camera and the secondary camera in the small-format oblique camera systems

However, the rotation parameters remain ambiguous, as the manufacturer

did not supply model values for the proprietary camera system.

Consequently, an initial approximate value is used, allowing the

software to fine-tune the outcome through a numerical method. In

modeling the small-format oblique camera system, both PIX4Dmapper and

Agisoft Metashape provide features to process aerial photography blocks

that incorporate multiple cameras. Each program allows for setting

parameters for every camera in the oblique camera system, such as

relative translation and relative rotation, along with weights for

camera-rig constraints. After processing, the program optimizes these

parameters based on numerical computation. In addition to the relative

translation and rotation parameters obtained from the data processing

software, the software can also autonomously handle the interior

orientation parameters of each camera in the small-format oblique camera

system.

This means that within the data processing software, parameters can be

set, and the software employs a numerical method to deduce optimal

values. These include the focal length ($f$), the principal point ($c_x$, $c_y$), the

radial lens distortion coefficients ($R_1$, $R_2$, $R_3$), and the tangential lens distortion

coefficients ($T_1$, $T_2$). This comprehensive process is commonly referred to as in-situ

calibration.
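To make the role of these interior orientation parameters concrete, the following sketch projects a camera-frame point to pixel coordinates using a common Brown-Conrady form of radial and tangential distortion. This is an assumption for illustration only: the sign conventions and the ordering of the tangential coefficients differ between software packages, and the principal point and distortion values below are hypothetical. Only the focal length and sensor dimensions are taken from Table 1.

```python
# Focal length of the nadir camera expressed in pixels (values from Table 1).
FOCAL_PX = 25.2 / (23.5 / 6000)

# Hypothetical principal point at the image centre.
CX, CY = 3000.0, 2000.0


def project_point(point_cam, focal_px, cx, cy,
                  radial=(0.0, 0.0, 0.0), tangential=(0.0, 0.0)):
    """Project a camera-frame point to pixels with Brown-Conrady distortion.

    radial holds (R1, R2, R3) and tangential holds (T1, T2); the term ordering
    is one common convention and is assumed here, not taken from either package.
    """
    x_c, y_c, z_c = point_cam
    x, y = x_c / z_c, y_c / z_c                 # normalised image coordinates
    rr = x * x + y * y                          # squared radial distance
    rad1, rad2, rad3 = radial
    tan1, tan2 = tangential
    scale = 1.0 + rad1 * rr + rad2 * rr ** 2 + rad3 * rr ** 3
    x_d = x * scale + 2.0 * tan1 * x * y + tan2 * (rr + 2.0 * x * x)
    y_d = y * scale + tan1 * (rr + 2.0 * y * y) + 2.0 * tan2 * x * y
    return focal_px * x_d + cx, focal_px * y_d + cy


point = (1.0, 0.5, 10.0)                        # hypothetical point in the camera frame
ideal = project_point(point, FOCAL_PX, CX, CY)
distorted = project_point(point, FOCAL_PX, CX, CY,
                          radial=(0.1, 0.0, 0.0), tangential=(1e-4, -1e-4))

print("Ideal pixel:     (%.2f, %.2f)" % ideal)
print("Distorted pixel: (%.2f, %.2f)" % distorted)
```

In self-calibration, the adjustment estimates these coefficients for each of the five cameras together with the exterior orientation, so that the reprojected points agree with the measured image coordinates.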

3. Results and Discussions

3.1 Parameterization and Numerical Modeling of Small-Format Oblique

Camera Systems

The research began by processing aerial photographs from the small-format

oblique camera system with both the relative translation and rotation

parameters left free constrained during numerical

processing. However, the results of image processing revealed

inconsistencies between the exterior orientation parameters of the

oblique systems and the physical geometry of the camera systems. To

address this, it is necessary to determine the camera rig parameters

and perform numerical modeling for small-format oblique camera systems,

ensuring consistency with the physical geometry of the camera systems

and allowing for the setting of weight in terms of precision. Table 4

shows the constraints of the weight for the camera rig parameters used

in this research.
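The effect of a tight or loose constraint can be illustrated with a one-dimensional example. The sketch below is a simplified illustration and not how either package solves the bundle block adjustment, which estimates all parameters jointly. It assumes that the weights in Table 4 act as a priori standard deviations and combines an initial value with a hypothetical image-derived estimate by inverse-variance weighting.

```python
def weighted_estimate(prior, sigma_prior, observed, sigma_observed):
    """Combine a prior value and an observation by inverse-variance weighting."""
    w_prior = 1.0 / sigma_prior ** 2
    w_obs = 1.0 / sigma_observed ** 2
    value = (w_prior * prior + w_obs * observed) / (w_prior + w_obs)
    sigma = (w_prior + w_obs) ** -0.5
    return value, sigma


# Translation: prior of 30 mm with a 1 mm standard deviation (Table 4),
# against a hypothetical, weakly determined image-derived value of 42 +/- 10 mm.
tx_value, tx_sigma = weighted_estimate(30.0, 1.0, 42.0, 10.0)

# Rotation: prior of 45 deg with a 5 deg standard deviation (Table 4),
# against a hypothetical, well determined image-derived value of 44.57 +/- 0.005 deg.
ry_value, ry_sigma = weighted_estimate(45.0, 5.0, 44.57, 0.005)

print(f"Translation stays near its prior: {tx_value:.3f} mm")
print(f"Rotation follows the image data:  {ry_value:.4f} deg")
```

A 1 mm standard deviation keeps the translation at its measured value even when the images suggest otherwise, whereas a 5 degree standard deviation lets the rotation move to wherever the image observations place it.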

For the relative translation parameters, denoted as $(T_x, T_y, T_z)_{rel_n}$, where $n$

ranges from 1 to 4, all four sets of offset values for the oblique

cameras are defined as "fully constrained". This is physically

consistent with the actual distance measurement from the reference

camera to the secondary camera. The relative rotation parameters,

denoted as $(R_x, R_y, R_z)_{rel_n}$, where $n$ ranges from 1 to 4, between the reference camera and each oblique

camera, are approximated as initial estimates and set as "free

constrained" during numerical processing. The final camera model,

resulting from the bundle block adjustment computation, confirms the

precise geometry and reveals consistent rig parameters as expected. The

results of the final numerical modeling are depicted in Table 5 for

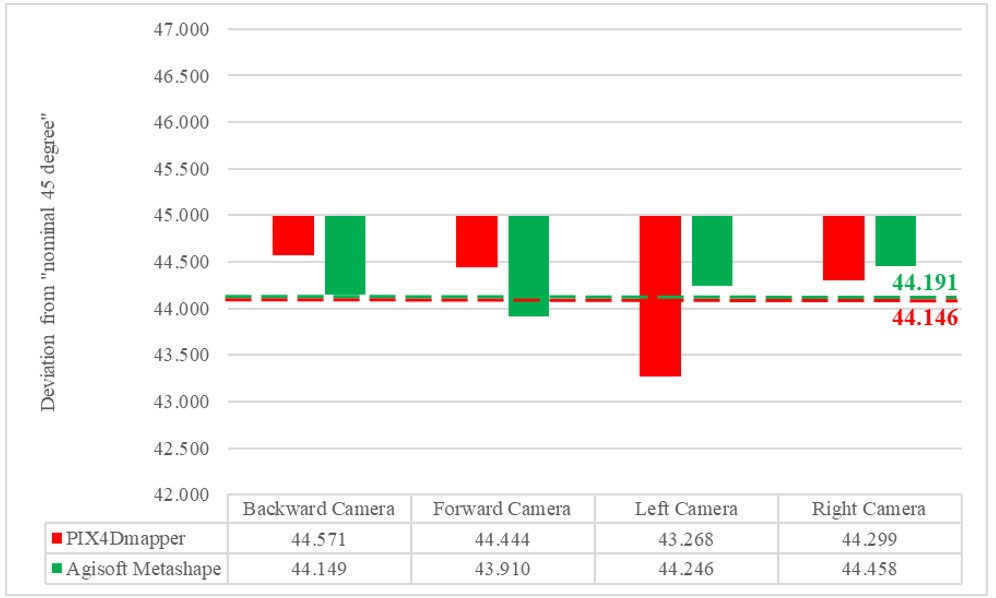

PIX4Dmapper and in Table 6 for Agisoft Metashape. Furthermore, Figure 3

shows the resulting rotations

(Rx, Ry, Rz)

in a bar graph, compared to the mean values of the four oblique

cameras.
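The deviations from the nominal 45 degrees can be recomputed from the optimized values in Tables 5 and 6. The sketch below assumes that the tilt of each oblique camera is the rotation component whose initial value is plus or minus 45 degrees (RY for the backward and forward cameras, RX for the left and right cameras); the variable names are illustrative.

```python
# Optimized tilt component (degrees) of each oblique camera, copied from
# Tables 5 and 6: RY for BWD/FWD and RX for LFT/RIT.
OPTIMIZED_TILT_DEG = {
    "PIX4Dmapper": {"BWD": 44.5712, "FWD": -44.4435, "LFT": -43.2676, "RIT": 44.2985},
    "Agisoft Metashape": {"BWD": 44.1488, "FWD": -43.9097, "LFT": -44.2460, "RIT": 44.4579},
}
NOMINAL_TILT_DEG = 45.0


def tilt_summary(tilts):
    """Return per-camera deviation from the nominal tilt and the mean tilt magnitude."""
    magnitudes = {camera: abs(angle) for camera, angle in tilts.items()}
    deviations = {camera: m - NOMINAL_TILT_DEG for camera, m in magnitudes.items()}
    mean_tilt = sum(magnitudes.values()) / len(magnitudes)
    return deviations, mean_tilt


summaries = {name: tilt_summary(tilts) for name, tilts in OPTIMIZED_TILT_DEG.items()}

for name, (deviations, mean_tilt) in summaries.items():
    print(name)
    for camera, deviation in deviations.items():
        print(f"  {camera}: {deviation:+.4f} deg from nominal")
    print(f"  mean tilt: {mean_tilt:.4f} deg")
```

In both packages every oblique camera is tilted slightly less than the nominal 45 degrees, and the two mean tilts agree to within about 0.05 degrees.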

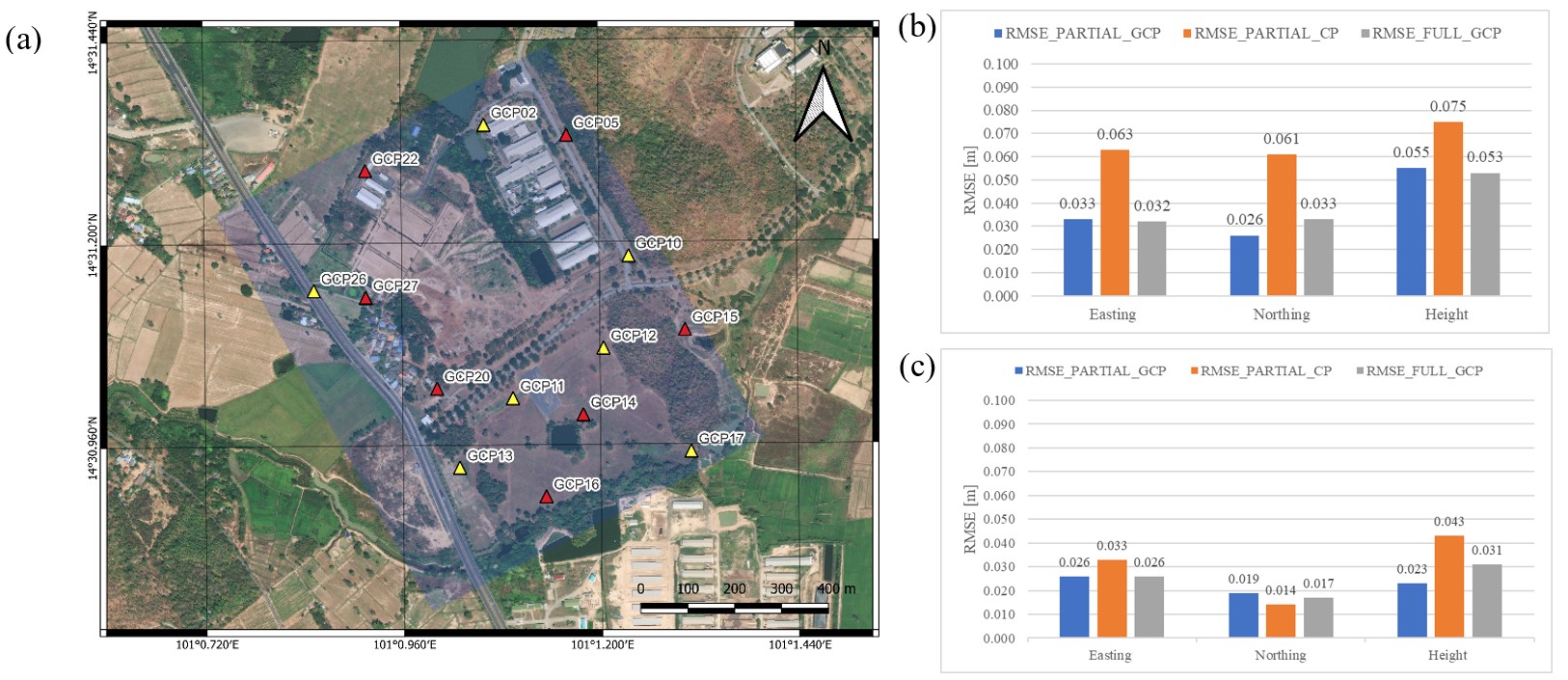

3.2 Quality Control for Bundle Block Adjustment Result

To ensure the precision of the photogrammetric results, an in-depth

examination of ground control points (GCPs) was undertaken before the

aerial triangulation process; this utilized a total of 14 GCPs. For

anomaly detection, the GCPs were divided into two groups: Group 1,

which included 7 partial GCPs, and Group 2, which contained 7 partial

checkpoints (CPs). The root mean square error (RMSE) values for the two

groups were analyzed along each axis, and no abnormal values were

detected (refer to Table 7 and Figure 4). Based on these findings, the

final aerial triangulation of the five-camera block was executed,

incorporating all 14 GCPs. The outcomes of this assessment, detailed in

Table 8, are integral to ensuring the accuracy and reliability of the

photogrammetric output.
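The horizontal RMSE values quoted in the Abstract and Conclusion are consistent with combining the easting and northing RMSE values of Table 8 as a root sum of squares. The sketch below shows that calculation; treating it as the procedure actually used is an assumption, and the helper names are illustrative.

```python
import math


def rmse(residuals):
    """Root mean square error of a list of residuals along one axis."""
    return math.sqrt(sum(r * r for r in residuals) / len(residuals))


def horizontal_rmse(rmse_easting, rmse_northing):
    """Combine per-axis RMSE values into a single horizontal RMSE."""
    return math.hypot(rmse_easting, rmse_northing)


# (easting, northing, height) RMSE in metres, copied from Table 8.
TABLE_8 = {
    "PIX4Dmapper": (0.016, 0.016, 0.019),
    "Agisoft Metashape": (0.011, 0.014, 0.046),
}

horizontal = {
    name: horizontal_rmse(easting, northing)
    for name, (easting, northing, _height) in TABLE_8.items()
}

for name, value in horizontal.items():
    height = TABLE_8[name][2]
    print(f"{name}: horizontal {value:.3f} m, vertical {height:.3f} m")
```

The `rmse` helper shows how each per-axis value would be obtained from the individual GCP or checkpoint residuals, which are not listed in the paper.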

3.3 Comparison of the Consistency of 3D Measurement from Two

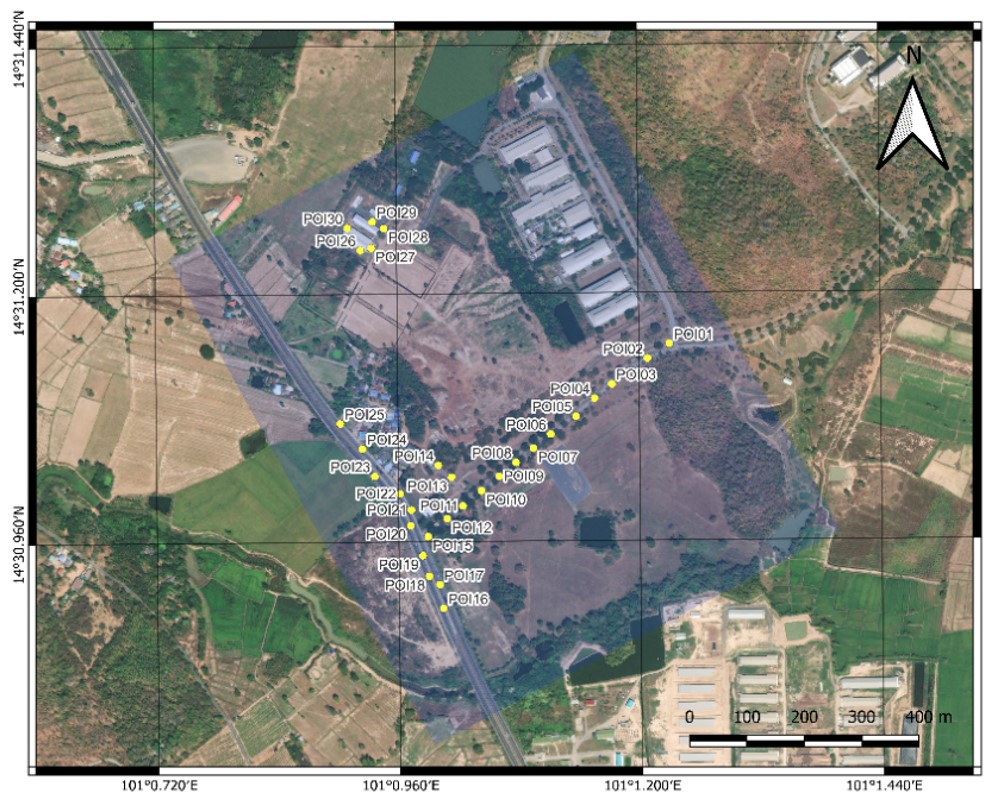

Photogrammetric Software

After defining the physical geometry of the mathematical model for the

small-format oblique camera system, this study utilized the multi-view

stereo techniques offered by both software programs to compare the

coordinates obtained from 3D measurements of 30 sample points in an

open field, as illustrated in Figure 5. These sample points were

strategically selected along roads or adjacent to buildings, chosen for

the ease of surveying in these locations and their relevance to future

design projects and related tasks. The RMSE results revealed

differences of 0.065 m in the horizontal component and 0.110 m in the

vertical component between the two software packages. While these

differences exist, they are not significant. Potential explanations for

these nominal error values include the state-of-the-art algorithms and

error correction methodologies used in both software packages.

Additionally, external factors such as temporal changes affecting light

conditions, minor camera misalignments, and systematic errors from GPS or

GNSS (such as atmospheric delays or multipath effects) might also

contribute to these variances.
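A comparison of this kind reduces to differencing the coordinates of the same points measured in the two software packages. The following is a minimal sketch with hypothetical coordinates, since the 30 measured sample points are not listed in the paper; it assumes each point is given as easting, northing and height in metres.

```python
import math


def rmse_between(points_a, points_b):
    """Horizontal and vertical RMSE between two matched lists of (E, N, H) points."""
    count = len(points_a)
    sum_horizontal = 0.0
    sum_vertical = 0.0
    for (e_a, n_a, h_a), (e_b, n_b, h_b) in zip(points_a, points_b):
        sum_horizontal += (e_a - e_b) ** 2 + (n_a - n_b) ** 2
        sum_vertical += (h_a - h_b) ** 2
    return math.sqrt(sum_horizontal / count), math.sqrt(sum_vertical / count)


# Hypothetical coordinates of the same two points measured in each package.
points_package_a = [(0.00, 0.00, 0.00), (10.00, 10.00, 5.00)]
points_package_b = [(0.03, 0.04, 0.10), (10.00, 10.00, 5.10)]

rmse_h, rmse_v = rmse_between(points_package_a, points_package_b)
print(f"Horizontal RMSE: {rmse_h:.3f} m, vertical RMSE: {rmse_v:.3f} m")
```

Because neither set of coordinates is treated as ground truth, the resulting values describe the agreement between the two packages rather than the absolute accuracy of either.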

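Table 4: Proposed weights for camera-rig parameter constraints

| Parameter | Symbol | Weight |
|---|---|---|
| Translation ("fully constrained") | $T_X$ | 1 mm |
| Translation ("fully constrained") | $T_Y$ | 1 mm |
| Translation ("fully constrained") | $T_Z$ | 1 mm |
| Rotation ("free constrained") | $R_X$ | 5 deg |
| Rotation ("free constrained") | $R_Y$ | 5 deg |
| Rotation ("free constrained") | $R_Z$ | 5 deg |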

Table 5: Solutions for the relative translation and rotation parameters in the case of PIX4Dmapper

| Camera Model | $T_X$ [mm] | $T_Y$ [mm] | $T_Z$ [mm] | $R_X$ [deg] | $R_Y$ [deg] | $R_Z$ [deg] |
|---|---|---|---|---|---|---|
| 3DM_V3_MID_25.2mm_6000x4000 (RGB) | Reference Camera | | | | | |
| 3DM_V3_BWD_35.7mm_6000x4000 (RGB) | | | | | | |
| Initial values | -30.000 | 0.000 | 20.000 | 0.0000 | 45.0000 | 90.0000 |
| Optimized values | -30.000 | 0.000 | 20.000 | -0.3388 | 44.5712 | 90.3845 |
| Uncertainties (sigma) | | | | 0.005 | 0.004 | 0.007 |
| 3DM_V3_FWD_35.7mm_6000x4000 (RGB) | | | | | | |
| Initial values | 30.000 | 0.000 | 20.000 | 0.0000 | -45.0000 | -90.0000 |
| Optimized values | 30.000 | 0.000 | 20.000 | 0.0873 | -44.4435 | -90.0238 |
| Uncertainties (sigma) | | | | 0.003 | 0.004 | 0.004 |
| 3DM_V3_LFT_35.7mm_6000x4000 (RGB) | | | | | | |
| Initial values | 0.000 | -30.000 | 20.000 | -45.0000 | 0.0000 | -180.0000 |
| Optimized values | 0.000 | -30.000 | 20.000 | -43.2676 | 0.1692 | -179.8003 |
| Uncertainties (sigma) | | | | 0.004 | 0.003 | 0.002 |
| 3DM_V3_RIT_35.7mm_6000x4000 (RGB) | | | | | | |
| Initial values | 0.000 | 30.000 | 20.000 | 45.0000 | 0.0000 | 0.0000 |
| Optimized values | 0.000 | 30.000 | 20.000 | 44.2985 | 1.1534 | -0.4407 |
| Uncertainties (sigma) | | | | 0.009 | 0.003 | 0.004 |

Table 6: Solutions for the relative translation and rotation parameters in the case of Agisoft Metashape

| Camera Model | $T_X$ [mm] | $T_Y$ [mm] | $T_Z$ [mm] | $R_X$ [deg] | $R_Y$ [deg] | $R_Z$ [deg] |
|---|---|---|---|---|---|---|
| 3DM_V3_MID_25.2mm_6000x4000 (RGB) | Reference Camera | | | | | |
| 3DM_V3_BWD_35.7mm_6000x4000 (RGB) | | | | | | |
| Initial values | -30.000 | 0.000 | 20.000 | 0.0000 | 45.0000 | 90.0000 |
| Optimized values | -29.996 | 0.026 | 19.992 | -0.4290 | 44.1488 | 90.4198 |
| Uncertainties (sigma) | | | | 0.004 | 0.004 | 0.003 |
| 3DM_V3_FWD_35.7mm_6000x4000 (RGB) | | | | | | |
| Initial values | 30.000 | 0.000 | 20.000 | 0.0000 | -45.0000 | -90.0000 |
| Optimized values | 30.003 | -0.016 | 20.004 | 0.1733 | -43.9097 | -89.9509 |
| Uncertainties (sigma) | | | | 0.004 | 0.004 | 0.003 |
| 3DM_V3_LFT_35.7mm_6000x4000 (RGB) | | | | | | |
| Initial values | 0.000 | -30.000 | 20.000 | -45.0000 | 0.0000 | -180.0000 |
| Optimized values | 0.015 | -29.998 | 19.998 | -44.2460 | -0.1393 | -179.7840 |
| Uncertainties (sigma) | | | | 0.005 | 0.003 | 0.001 |
| 3DM_V3_RIT_35.7mm_6000x4000 (RGB) | | | | | | |
| Initial values | 0.000 | 30.000 | 20.000 | 45.0000 | 0.0000 | 0.0000 |
| Optimized values | 0.001 | 30.011 | 20.004 | 44.4579 | 1.1884 | -0.3750 |
| Uncertainties (sigma) | | | | 0.005 | 0.003 | 0.001 |

Table 7: RMSE values for anomaly detection in GCPs based on the nadir photographs block

| Processing Software | RMSE Scheme | Easting error [m] | Northing error [m] | Height error [m] |
|---|---|---|---|---|
| PIX4Dmapper | RMSE_PARTIAL_GCP | 0.033 | 0.026 | 0.055 |
| PIX4Dmapper | RMSE_PARTIAL_CP | 0.063 | 0.061 | 0.075 |
| PIX4Dmapper | RMSE_FULL_GCP | 0.032 | 0.033 | 0.053 |
| Agisoft Metashape | RMSE_PARTIAL_GCP | 0.026 | 0.019 | 0.023 |
| Agisoft Metashape | RMSE_PARTIAL_CP | 0.033 | 0.014 | 0.043 |
| Agisoft Metashape | RMSE_FULL_GCP | 0.026 | 0.017 | 0.031 |

Table 8: RMSE values for the combined five-camera block, based on the full GCPs

| Processing Software | RMSE | Easting error [m] | Northing error [m] | Height error [m] |
|---|---|---|---|---|
| PIX4Dmapper | RMSE_FULL_GCP | 0.016 | 0.016 | 0.019 |
| Agisoft Metashape | RMSE_FULL_GCP | 0.011 | 0.014 | 0.046 |

Figure 3: Comparison of deviations from forty-five

degrees for each oblique camera by two software packages. Dashed lines

indicate average angles: red for PIX4Dmapper and green for Agisoft

Metashape

Figure 4: (a) Distribution of points for anomaly

investigation, with partial GCPs represented as yellow triangles and

partial CPs as red triangles. (b) and (c) display RMSE value

comparisons for PIX4Dmapper and Agisoft Metashape, respectively

Figure 5: Distribution of the 30 sample points (yellow

circles) in the open field of the study area (blue area)

These minor error magnitudes underscore the proficiency of both software

programs in accurately representing the numerical model of the small-format

oblique camera. This finding is significant for the fields of aerial

mapping and 3D reconstruction, suggesting that industry professionals can

reliably use either software package for precision tasks. This offers

flexibility in tool selection based on criteria such as user experience,

computational speed, or software synergy, rather than solely on accuracy.

4. Conclusion

Small-format oblique camera systems, which simultaneously acquire aerial

photographs using one nadir camera and four oblique cameras, have gained

popularity in mapping due to their ability to capture multi-view images.

This capability yields a higher number of photos suitable for multi-view

stereo and point-cloud processing. However, the intrinsic

oblique effect in these systems can impact the accuracy of keypoint

generation, a crucial element in automated structure-from-motion software

processing. This study examines the camera rig parameters, specifically

the relative relationship between the nadir camera and oblique cameras in

small-format oblique camera systems, using two popular photogrammetric

software packages: PIX4Dmapper and Agisoft Metashape. The objective was to

leverage the unique features of these software packages to enhance the

accuracy and quality of photogrammetric outputs generated by small-format

oblique camera systems. The research concludes that the

'3DM-V3' small-format oblique camera system can be parameterized and

numerically modeled as follows. The four sets of relative translation

parameters $(T_x, T_y, T_z)_{rel_n}$,

where $n$ ranges from 1 to 4, must be 'fully constrained'. The four

sets of relative rotation parameters $(R_x, R_y, R_z)_{rel_n}$, where $n$ ranges from 1 to 4,

between the reference camera and the four oblique cameras, can

initially be approximated and then set as 'free constrained' during

numerical processing. Other intrinsic camera parameters from all five

cameras will be numerically adjusted according to standard procedure. The

final camera model produced by bundle block adjustment (BBA) in both

software suites confirms the accuracy of the geometric model. PIX4Dmapper

yielded an RMSE of 0.023 m for the horizontal component and 0.019 m for the

vertical component, while Agisoft Metashape resulted in horizontal and

vertical RMSE values of 0.018 m and 0.046 m, respectively. The horizontal

and vertical RMSE differences calculated from both processing programs are

satisfactory, given the ground sample distance (GSD) of nadir photographs,

which is 2.34 cm, and the GCPs measured by GNSS RTK with 2-cm and 5-cm

accuracy for the horizontal and vertical components, respectively. For

future applications, it is essential to define mathematical camera models

and parameterization when processing aerial photographs from small-format

oblique camera systems. Doing so ensures that the model applied in the software

mirrors the real-world camera configuration, aligning with the actual rig

geometry and producing accurate outputs.

Additionally, both software packages utilize the multi-view stereo

technique, which enhances 3D measurement accuracy, augments detail

interpretation, and supports classification. This tool is indispensable for

various engineering projects. The integration of oblique photographs

amplifies geometric efficiency in 3D mapping production, leading to denser

point clouds and heightened precision in depicting certain elements, such

as buildings or vegetation, more closely aligned with reality. This

technological advancement has transformed the mapping and surveying domain,

streamlining data collection and analysis for applications such as Building

Information Modeling (BIM), urban planning, and building control

legislation. The adoption of UAV-based oblique cameras further ensures

safer and more economical mapping and surveying operations, reducing the

need for manned aircraft and minimizing extensive ground surveys.

Acknowledgments

The authors express their gratitude to the Center of Excellence in

Infrastructure Management at Chulalongkorn University and InfraPlus Co.,

Ltd. for their support in providing the data, processing software, and

hardware used in this research.

References

[1] Remondino, F. and Gerke, M., (2015). Oblique Aerial Imagery: A Review. In D. Fritsch (Ed.), Proceedings of Photogrammetric Week '15, 7-11 September, Stuttgart, Germany. 75-83. Available: http://www.ifp.uni-stuttgart.de/publications/phowo15/090Remondino.pdf.

[2] Gerke, M., Nex, F., Remondino, F., Jacobsen, K., Kremer, J., Karel, W., Hu, H. and Ostrowski, W., (2016). Orientation of Oblique Airborne Image Sets - Experiences from the ISPRS/EUROSDR Benchmark on Multi-Platform Photogrammetry. The International Archives of Photogrammetry, Remote Sensing, and Spatial Information Sciences, Vol. XLI-B1, 185-191. https://doi.org/10.5194/isprs-archives-XLI-B1-185-2016.

[3] Jiang, S. and Jiang, W., (2017). On-board GNSS/IMU Assisted Feature Extraction and Matching for Oblique UAV Images. Remote Sensing, Vol. 9(8), 1-21. https://doi.org/10.3390/rs9080813.

[4] Lin, Y., Jiang, M., Yao, Y., Zhang, L. and Lin, J., (2015). Use of Oblique UAV Imaging for the Detection of Individual Trees in Residential Environments. Urban Forestry & Urban Greening, Vol. 14(2), 404-412. https://doi.org/10.1016/j.ufug.2015.03.003.

[5] Remondino, F., Toschi, I., Gerke, M., Nex, F., Holland, D., McGill, A., Lopez, J. T. and Magarinos, A., (2016). Oblique Aerial Imagery for NMA - Some Best Practices. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci., Vol. XLI-B4, 639-645. https://doi.org/10.5194/isprs-archives-XLI-B4-639-2016.

[6] Jiang, S., Jiang, W., Huang, W. and Yang, L., (2017). UAV-Based Oblique Photogrammetry for Outdoor Data Acquisition and Offsite Visual Inspection of Transmission Line. Remote Sensing, Vol. 9(3), 1-25. https://doi.org/10.3390/rs9030278.

[7] sUAS News. (2017). Ultra-Efficient Photogrammetry with Pix4Dmapper Pro's MultiCamera Rig Processing, [Online]. Available: sUAS, https://www.suasnews.com/2017/03/ultra-efficient-photogrammetry-pix4dmapper-pros-multi-camera-rig-processing/. [Accessed March 12, 2023].

[8] Colomina, I. and de la Tecnologia, P. M., (2008). Toward a New Paradigm for High-Resolution Low-Cost Photogrammetry and Remote Sensing. Proceedings of the ISPRS XXI Congress, Beijing, China. 3-11.

[9] Eisenbeiß, H., (2009). UAV Photogrammetry, Doctoral Dissertation. Institute of Geodesy and Photogrammetry, Swiss Federal Institute of Technology in Zürich, Switzerland.

[10] Remondino, F., Barazzetti, L., Nex, F., Scaioni, M. and Sarazzi, D., (2011). UAV Photogrammetry for 3D Mapping and Modeling: Current Status and Future Perspectives. International Archives of the Photogrammetry, Remote Sensing, and Spatial Information Sciences, Vol. 38(1). https://doi.org/10.5194/isprsarchives-XXXVIII-1-C22-25-2011.

[11] Nex, F. and Remondino, F., (2014). UAV for 3D Mapping Applications: A Review. Applied Geomatics, Vol. 6(1), 1-15. https://doi.org/10.1007/s12518-013-0120-x.

[12] Nex, F., Armenakis, C., Cramer, M., Cucci, D. A., Gerke, M., Honkavaara, E., Kukko, A., Persello, C. and Skaloud, J., (2022). UAV in the Advent of the Twenties: Where We Stand and What Is Next. ISPRS Journal of Photogrammetry and Remote Sensing, Vol. 184, 215-242. https://doi.org/10.1016/j.isprsjprs.2021.12.006.

[13] Gerke, M., Nex, F., Remondino, F., Jacobsen, K., Kremer, J., Karel, W., Hu, H. and Ostrowski, W., (2016). Orientation of Oblique Airborne Image Sets - Experiences from the ISPRS/EUROSDR Benchmark on Multi-Platform Photogrammetry. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci., Vol. XLI-B1, 185-191. https://doi.org/10.5194/isprs-archives-XLI-B1-185-2016.

[14] Karel, W., Ressl, C. and Pfeifer, N., (2016). Efficient Orientation and Calibration of Large Aerial Blocks of Multi-Camera Platforms. Int. Arch. Photogramm. Remote Sens. Spatial Inf. Sci., Vol. XLI/W4. https://doi.org/10.5194/isprs-archives-XLI-B1-199-2016.

[15] Alsadik, B., Remondino, F. and Nex, F., (2022). Simulating a Hybrid Acquisition System for UAV Platforms. Drones, Vol. 6(11), 1-21. https://doi.org/10.3390/drones6110314.

[16] Bannakulpiphat, T., Santitamnont, P. and Maneenart, T., (2022). A Case Study of Multi-head Camera Systems on UAV for the Generation of High-Quality 3D Mapping. Research and Development Journal of the Engineering Institute of Thailand, Vol. 33(4).

[17] Pix4D. (2023). How Are the Internal and External Camera Parameters Defined, [Online]. Available: Pix4D, https://support.pix4d.com/hc/en-us/articles/202559089-How-are-the-Internal-and-External-Camera-Parameters-defined. [Accessed March 12, 2023].