Frontline Learning Research Vol. 7 No 4 (2019) 1-24

ISSN 2295-3159

1 Ludwig-Maximilians-Universität München, Germany

2 Technische Universität München, Germany

3 Universität Hildesheim, Germany

4 University Hospital, Ludwig-Maximilians-Universität München, Germany

5 Universität Tübingen, Germany

6 Pädagogische Hochschule Freiburg, Germany

Article received 10 June 2018 / revised 7 May / accepted 30 August / available online 22 October

We propose a conceptual framework which may guide research on fostering diagnostic competences in simulations in higher education. We first review and link research perspectives on the components and the development of diagnostic competences, taken from medical and teacher education. Applying conceptual knowledge in diagnostic activities is considered necessary for developing diagnostic competences in both fields. Simulations are considered promising in providing opportunities for knowledge application when real experience is overwhelming or not feasible for ethical, organizational or economic reasons. To help learners benefit from simulations, we then propose a systematic investigation of different types of instructional support in such simulations. We particularly focus on different forms of scaffolding during problem-solving and on the possibly complementary roles of the direct presentation of information in these kinds of environments. Two sets of factors, individual learning prerequisites (such as executive functions or epistemic emotions) and contextual factors (such as the nature of the diagnostic situation or the domain), are viewed as potential moderators of the instructional effects. Finally, we outline an interdisciplinary research agenda concerning the instructional design of simulations for advancing diagnostic competences in medical and teacher education.

Keywords: diagnostic competence; simulation; medical education; instructional support; learning through problem-solving; scaffolding; teacher education

Diagnostic competences are important goals of many academic programmes, including medicine and teacher education programmes. Recently, both the scientific understanding of the structure of diagnostic competences and their measurement have improved substantially (e.g., Abs, 2007; Heitzmann, 2014; Herppich et al., 2018; Tariq & Ali, 2013). However, with a better understanding of diagnosing and diagnostic competences, it became apparent how even advanced students and young professionals struggle to apply their knowledge to individual cases. This issue led to an emphasis on the question of how we can design and provide learning opportunities where higher education students can learn to diagnose in their fields. There is a need for more or less authentic situations in which conceptual knowledge can be acquired and applied to problems. However, providing real practice opportunities may not always be the most effective approach, because highly complex and dynamically changing real-life situations can be overwhelming for novice learners (Grossman et al., 2009). Therefore, approximations to real-life practice using simulations may sometimes be more effective in a higher education programme than just increasing the opportunity for real-life practice (e.g., Stegmann, Pilz, Siebeck, & Fischer, 2012). In this manuscript, we address how simulations can be designed so that learners can make the most of them.

Design features vary broadly across simulations in different studies. For instance, simulations differ in whether reflection phases are included or whether additional information can be accessed during the simulation. So far, we do not know enough about the instructional design of effective simulations, that is, which forms of instructional support (e.g., forms of scaffolding, explicit presentation of information) are linked to better learning processes or learning outcomes. There is also a broad scope of diagnostic situations which can be simulated. The simulation can be targeted to support learning to diagnose in the context of a patient interview or in direct interaction with one or several students in class. Simulations sometimes afford interaction with documents and materials rather than with people, as for example in diagnosing tentative causes of a patient’s fever from an X-ray image or diagnosing the causes of a failure to solve specific tasks in mathematics by analysing the method of calculation a student has used. The cognitive requirements in diagnosing might be quite different depending on these as well as other specific features of a situation. Thus, the question of the extent to which the effects of instructional designs generalize across different types of simulated diagnostic situations is relevant. The effectiveness of instructional support is also known to depend on interindividual differences. Most obviously, this is the case with different levels of prior knowledge, where maladjusted instruction has been shown to be not only ineffective but sometimes even detrimental to learning (Kalyuga, 2007). Other individual learning prerequisites include motivation, emotion or general cognitive abilities. What do we know about how interindividual differences in these variables influence the advancement of diagnostic competences in simulations? And what should we know in order to be able to design simulation-based learning environments which cater to the needs and preferences of individual learners?

However, before we can begin to address these design features and questions, we need a clear conceptualization of the competences that we want to foster with simulations. This will form the basis for further design steps. Therefore, the first goal of this article is to identify and discuss important components and relationships between components regarding diagnostic activities in the fields of both teacher and medical education. Based on a discussion of commonalities and differences between the fields, we propose a conceptual framework and sketch a research agenda addressing the facilitation of diagnostic competences in simulation-based learning environments.

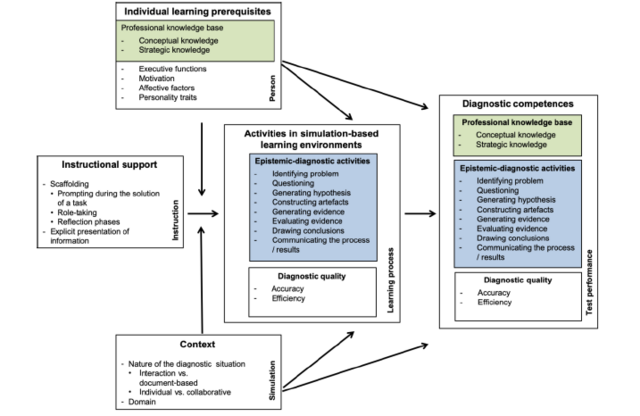

We first propose a conceptual framework, as displayed in Fig. 1, outlining an approach to fostering diagnostic competences with simulations which will guide our work on the aforementioned design features and questions. The main body of the article comprises an elaboration of the framework’s components. First, we address the two components of diagnostic competences in the framework: the knowledge which learners need to possess and the processes they need to master. Second, we elaborate on learning through simulations and on ways to support this process in order to maximize learning gains. Third, we introduce a range of relevant learning prerequisites and contextual factors. In all three parts, we selectively review the research in medical and teacher education and explain how this research ties into the construction of the framework.

In the second part of the article, we briefly propose sets of hypotheses and a possible methodological approach for a research programme based on the conceptual framework. The ultimate goal of the research programme is to advance the conceptual framework into a theory of learning to diagnose in simulations that can inform the design of simulation-based learning environments in medical and teacher education and possibly beyond.

In this section, we will first provide a rationale for why diagnostic competences are relevant not only in the medical context but also for teachers. We propose that despite differences in the professional practice in both fields, there are also substantial commonalities. We then explicate the premises for a conceptual framework.

Diagnosing means “recognizing exactly” or “differentiating” and is associated with the activities and processes of classifying causes and forms of phenomena (“diagnosing”, n.d.). These causes and forms are often not directly observable; they are latent or hidden and need to be identified through observable cues and by drawing inferences. Typically, diagnoses are needed as decision points for action and for interventions targeted at improving a problem. Professions develop, maintain and teach diagnostic activities and procedures which are considered reliable and trustworthy, and they also develop quality standards for diagnosing and for diagnoses. In this article, we consider two professions. One is a well-known example with respect to diagnosing, namely medicine. The other, teacher education, is less well-known for diagnosing, but research has increasingly referred to diagnosing as a relevant professional activity. In both medicine and education, diagnostic activities are considered components of professional problem-solving. In education, diagnosing is often regarded as a crucial precondition and an element of adaptive teaching, for example when a teacher assesses the performance of a student (Schrader, 2009, 2011). Some researchers in the field of teacher education have been critical about the use of the term “teacher diagnostics”, particularly regarding the possibly negative effects of wrongly labelling students in the school context (Borko, Roberts, & Shavelson, 2008). Also, in the medical field, researchers are considering the possible negative side effects of diagnoses, which can sometimes lead patients onto destructive paths (e.g., Croft et al., 2015). At times, terms such as “teacher assessment”, “teacher judgment” and “teacher decision-making” have been used instead in order to find more nuanced ways to describe the tasks and processes involved in continuously gaining and evaluating knowledge about students. However, these alternative conceptualizations continue to include teacher diagnostic activities (Herppich et al., 2018), and many current research approaches now use the term “teachers’ diagnostic competences” (Glogger-Frey, Herppich, & Seidel, 2018).

Of course, one may ask whether these professions and research strands share commonalities which go beyond terminological similarities. The link between medicine and teacher education seems risky at first glance. There are many salient differences between the professional practice of teachers and that of medical doctors, for example in terms of group size and the duration of diagnostic situations, relevant knowledge bases and the standardization of processes.

We propose in this paper that despite these differences, there are also substantial commonalities between the two professions with respect to diagnosing, which warrant a joint conceptual framework as well as joint research programmes building on the framework. This proposition builds on the following premises.

First, an underlying argument is based on the idea of knowledge communities. Humans try to improve ways of generating knowledge about their world to achieve, among other things, a higher accuracy in diagnoses of problems and, ultimately, more adequate follow-up actions. In this process, humans are capable of overcoming their intuitive epistemic mechanisms by developing more systematic knowledge creation activities (e.g., Stanovich, 2011). Knowledge communities maintain, share, and teach the competences needed to engage in these more systematic knowledge creation activities. They also contribute to developing quality criteria like standards for diagnostic procedures, reporting diagnoses and discussing outcomes of the diagnostic process with other people. Professions can be considered such knowledge communities. At this point, one might object to our comparison of professions by arguing that diagnostic processes in the medical profession show a higher degree of standardization compared to those in the educational domain. However, we do not think this difference makes our comparison invalid. We assume that the field of education would benefit from a higher degree of standardization in some diagnostic situations, because standardization could lead to more reliable and valid diagnostic results than intuitive approaches. Conversely, medicine entails common diagnostic situations where highly standardized and algorithmic diagnostic activities are just not feasible. An example would be primary care, when doctors need to diagnose and treat many patients in short time frames. We thus assume that standardization is a relevant dimension for both professions, medicine and teaching, but a higher degree of standardization cannot generally be considered a sign of more advanced professionalization.

Second, it seems plausible that effects of instructional interventions may generalize more readily to cognitively similar diagnostic situations across the domains than to cognitively dissimilar situations within one domain (see Kirschner, Verschaffel, Star, & Van Dooren, 2017). Consider, for instance, instructional guidance for learning to interpret evidence from several different sources when a teacher tries to determine a student’s level of mathematical understanding or the misconceptions leading to the student’s mistakes, compared with a general medical practitioner trying to determine the causes of a patient’s symptoms by reading the patient’s file, conducting a physical examination and using newly generated laboratory parameters. It seems plausible that both medical students and pre-service teachers can benefit from guidance for generating a set of early hypotheses which help them prioritize evidence and search for and select necessary evidence in a goal-oriented manner. However, this type of support might be less helpful in the contexts of collaborative diagnosing, for instance, between an internist and a radiologist. In the latter context, medical students’ and young doctors’ critical difficulties in sharing relevant information based on their meta-knowledge of the other specialists’ knowledge, tasks and instruments were identified (Tschan et al., 2009). An effective instructional intervention for information sharing might be quite different from instruction in support of early hypothesis generation.

Third, there are also some striking commonalities between teachers’ and physicians’ professional practice. They both engage in decision-making related to valued characteristics in other people (that is, health and education), they both must integrate various different knowledge bases and they both must master diagnostic activities like generating hypotheses or drawing conclusions (Fischer et al., 2014). And in both domains, higher education programmes increasingly aim at advancing their students’ competences through hands-on experiences and reflections on practical experience (e.g., Grossman & McDonald, 2008).

Fourth, the research strands from these two domains also have interesting commonalities. Most importantly, they consider diagnosing as part of professional action related to cases. The diagnostician uses observations and data collected with a specific goal in mind. A diagnosis, then, is based on data and is the result of a systematic and reflective process (Helmke, 2010). Understood this way, diagnosing is not restricted to medical decision-making in the context of patients’ diseases; instead, diagnosing can be considered more generally as the goal-oriented collection and interpretation of case-specific or problem-specific information to reduce uncertainty in order to make medical or educational decisions.

After explicating why we think it makes sense to analyse the diagnostic competences of medical practitioners and teachers with a joint conceptual framework, we now want to introduce this conceptual framework in more detail.

In our conceptual framework (Fig. 1), we consider diagnosing as a process of goal-oriented collection and integration of case-specific information to reduce uncertainty in order to make medical or educational decisions.

Figure 1. Fostering diagnostic competences with simulations: a conceptual framework of factors.

Diagnosing involves different knowledge bases. The conceptual framework builds on a recent attempt to integrate different knowledge classifications into a single two-dimensional classification. This classification distinguishes knowledge facets (content knowledge, pedagogical content knowledge and pedagogical knowledge) and knowledge types (knowing that, knowing how and knowing why and when) (Förtsch et al., 2018). The conceptual framework further entails diagnostic activities like generating hypotheses or evaluating evidence. Diagnostic competences are defined as individual dispositions enabling people to apply their knowledge in diagnostic activities according to professional standards to collect and interpret data in order to make high-quality decisions. Domains differ in what is considered an appropriate engagement in these diagnostic activities and to what degree these activities are formalized and standardized.

In the following sections, each component is explained in more detail. First, we will have a closer look at the professional knowledge bases and at diagnostic activities.

In both domains, medicine and teaching in primary and secondary schools, there is (1) research focusing predominantly on the types and combinations of knowledge needed for successful diagnoses, for example conceptual knowledge and strategic knowledge. In both domains, there is also (2) research focusing on the process of diagnosing and the different activities diagnosticians engage in to derive accurate and well-justified diagnoses, for example generating hypotheses or evaluating evidence. These two strands of research will be reviewed in this section.

3.1.1. Research focusing on the professional knowledge base for diagnosing

A common differentiation of the professional knowledge base in medicine suggests two knowledge types: (a) biomedical knowledge and (b) clinical knowledge (Patel, Evans, & Groen, 1989). (a) Biomedical knowledge includes knowledge about the normal functioning of the human body as well as pathological mechanisms or processes in the causation of diseases (Boshuizen & Schmidt, 1992; Kaufman, Yoskowitz, & Patel, 2008). (b) Clinical knowledge is knowledge about symptoms or symptom patterns of diseases, their typical courses and factors indicating a high likelihood of a particular disease, such as patient characteristics or environmental factors. It also comprises knowledge about appropriate therapeutic treatments (Van De Wiel, Boshuizen, & Schmidt, 2000). Research in medical expertise and in medical education has focused on how these knowledge types are used when diagnosing patient cases. One main interest has been how novices, intermediates and experts differ in their application of these types of knowledge. The main goal of this comparison was to understand the processes and changes involved in expertise development. Cognitive psychologists working in the context of medical expertise and medical education have suggested models which advance the mere classification of knowledge according to content. For example, Stark, Kopp and Fischer (2011) distinguished conceptual knowledge from practical knowledge. Conceptual knowledge concerns concepts and their interrelations, whereas practical knowledge describes how conceptual knowledge is used in problem-solving situations. Practical knowledge is further divided into the knowledge of steps which can be taken in problem-solving (strategic knowledge) and knowledge about the conditions of the successful application of these steps (conditional knowledge). The distinction between conceptual and practical knowledge has received validating support from empirical studies inside and outside the medical domain (see Heitzmann, 2014, for an overview).

Research in medical education also offers specific accounts of the changes that the knowledge bases undergo in the course of developing expertise. The most prominent change is that of encapsulation into illness scripts, as developed by Boshuizen and colleagues (1992). This account proposes the qualitative entanglement of biomedical and clinical knowledge as a key to better understanding the development of diagnostic expertise (e.g., Boshuizen & Schmidt, 2008; Schmidt & Boshuizen, 1993; Woods, 2007). Through repeated confrontation with clinical cases, biomedical knowledge gets “encapsulated” (Mamede et al., 2012). That these two types of knowledge are encapsulated means that biomedical knowledge gets interconnected and integrated with clinical features. Learners connect the knowledge of underlying biomedical mechanisms with symptoms of a disease, with patient characteristics and with the conditions under which a certain disease emerges. This encapsulation yields so-called “illness scripts” (Charlin, Boshuizen, Custers, & Feltovich, 2007; Schmidt & Rikers, 2007). Biomedical knowledge in an encapsulated form is still important for a coherent understanding of a disease, even though it might not reach conscious attention (Woods, 2007). An illness script also contains knowledge of the relations between different diseases as well as of cases of a disease the physician has previously encountered (Schmidt & Rikers, 2007).

For teachers, Shulman (1987) proposed a differentiation of three components of the professional knowledge base: (a) content knowledge about the subject matter being taught, such as knowledge of mathematics or biology, (b) pedagogical content knowledge about the teaching and learning in a subject area, for example typical misconceptions in physics or how to explain certain grammatical structures, and (c) pedagogical knowledge about teaching and learning independent of the subject area, including for example knowledge about classroom management, student motivation or learning strategies. Current research addresses the roles of these knowledge types (Tröbst et al., 2018) in professional action, including diagnosis. Important open questions include how the different components of professional knowledge interact (e.g., Tröbst et al., 2018) and possibly change through experience and how different knowledge components are restructured and possibly integrated (“amalgamation”, Tröbst et al., 2018) through knowledge application in decision-making and problem-solving contexts during the development of teaching expertise. Stürmer, Seidel, and Holzberger (2016) have recently hypothesized that knowledge transformation mechanisms in teacher expertise development are similar to those hypothesized for medical expertise development. In particular, these mechanisms can be characterized as a systematic restructuring rather than a mere accumulation of knowledge. As a consequence of this restructuring, performance increases while the consciously available knowledge decreases, similar to the process of encapsulation hypothesized in research on clinical reasoning.

A cross-domain foundation for empirical research on how to support the development of diagnostic competences requires a cross-domain conception of professional knowledge. While the distinction of conceptual and practical (strategic and conditional) knowledge appears applicable to the professional knowledge of teaching as well, the question whether the distinction of content knowledge, pedagogical content knowledge, and pedagogical knowledge has a counterpart in medicine is currently under discussion. Recently, there have been attempts to integrate the knowledge classifications across domains (Förtsch et al., 2018; Hargreaves, 2000). Förtsch et al. (2018) proposed a two-dimensional model integrating what they call facets of knowledge (content knowledge, pedagogical content knowledge and pedagogical knowledge) and types of knowledge (knowing that, knowing how and knowing why and when). The integration of pedagogical knowledge and pedagogical content knowledge in models of medical diagnosing particularly challenges established viewpoints. For instance, Förtsch et al. (2018) emphasize the importance of scrutinizing and communicating diagnostic processes and outcomes with colleagues as well as with patients and their families as an example of the need for this integration of pedagogical knowledge and pedagogical content knowledge into an overall model.
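For readers who want to use the two-dimensional classification as a coding scheme, for example to tag test items or think-aloud segments, the grid can be written down compactly. The following is only a minimal sketch under our own assumptions: the class names `Facet` and `KnowledgeType` and the helper `label` are hypothetical and are not taken from Förtsch et al. (2018); only the three facets and three types themselves come from the text above.

```python
from enum import Enum
from itertools import product


class Facet(Enum):
    """Knowledge facets named in the two-dimensional classification."""
    CK = "content knowledge"
    PCK = "pedagogical content knowledge"
    PK = "pedagogical knowledge"


class KnowledgeType(Enum):
    """Knowledge types named in the two-dimensional classification."""
    KNOWING_THAT = "knowing that"
    KNOWING_HOW = "knowing how"
    KNOWING_WHY_AND_WHEN = "knowing why and when"


# Crossing the two dimensions gives nine cells (3 facets x 3 types).
GRID = list(product(Facet, KnowledgeType))


def label(facet: Facet, ktype: KnowledgeType) -> str:
    """Hypothetical helper: a readable label for one cell of the grid."""
    return f"{facet.value} / {ktype.value}"


if __name__ == "__main__":
    for facet, ktype in GRID:
        print(label(facet, ktype))
```

Keeping the two dimensions as separate enumerations, rather than listing nine fixed categories, mirrors the idea that facets and types vary independently of each other.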

3.1.2. Research with a focus on diagnostic activities

Knowledge is applied in diagnostic activities in order to produce a diagnosis. Diagnostic activities can be characterized in different ways (e.g., Barrows & Pickell, 1992; Gräsel, 1997; Moskowitz, Kuipers, & Kassirer, 1988). There are repertoires of professional activities in which the different bodies of knowledge can be applied. Teacher education has emphasized teachers’ assessments of their students’ knowledge and skills. This research has focused on the accuracy of teachers’ judgments of students’ learning prerequisites and outcomes in primary and secondary school (Klug, Bruder, Kelava, Spiel, & Schmitz, 2013; Spinath, 2005; Südkamp, Kaiser, & Möller, 2012) as well as on the role of teachers’ diagnoses in formative assessment (e.g., Bennett, 2011; Glogger-Frey et al., 2018; Hattie, 2003). However, diagnostic activities in teaching have not yet been systematically investigated (e.g., Herppich et al., 2018; Shulman, 2015).

We introduce a taxonomy of epistemic activities which was developed by a multidisciplinary group of scientists from different fields (biology, mathematics, medicine, psychology and computer science; Fischer et al., 2014). The scientists agreed that the activities in the taxonomy were all relevant for generating knowledge in their domains. The taxonomy differentiates eight activities which we illustrate with examples from medicine and teaching:

(1) problem identification (e.g. a patient reports non-specific symptoms such as shortness of breath; a student wrongly answers a question in class);

(2) questioning (e.g. a doctor asks what could be the reason for the symptoms; a teacher asks what could be the reason for a student’s error);

(3) hypothesis generation (e.g. a doctor suspects a specific disease, such as a pulmonary embolism; the teacher suspects a specific misconception);

(4) the construction and redesign of artefacts (e.g. a medical report which prescribes further examination; the development of a task which provides insight into the presence of a misconception);

(5) evidence generation (e.g. conducting required further examination, for example through computed tomography; observation of the student’s solution of the task);

(6) evidence evaluation (e.g. evaluation of the computed tomography with radiological signs of a pulmonary embolism; evaluation of the solution of the task with some but not all of the signs for the hypothesized misconception);

(7) drawing conclusions (e.g. deciding that the most likely cause of the patient’s symptoms is a pulmonary embolism; deciding that the most likely reason for the student’s error is the assumed misconception, which impedes further learning); and

(8) communication and scrutinization (e.g. a medical report with the diagnosis of a pulmonary embolism for another doctor; informing another teacher about the discovered misconception held by a certain student so that this teacher can adapt the teaching).

As one can see, the eight epistemic activities can be translated into eight diagnostic activities, because diagnosing is a form of knowledge generation.

Diagnosing may not always require all eight epistemic-diagnostic activities, and no generally valid order is assumed for these eight activities. Rather, the number of steps and their order depend on the specificities of the case, the situation and the expertise of the individual diagnostician, including information available from previous diagnostic processes. Doctors or teachers may skip activities (probably because of preceding knowledge encapsulation; Boshuizen, Schmidt, Custers, & Van de Wiel, 1995) or may repeat activities in a circular fashion. The number of activities or their order may, therefore, not be reliable indicators of the quality of the diagnostic process. Rather, the quality of the single activities and their combinations in sequences may be better indicators. The quality criteria applied to an activity and to sequences of activities are not general but are typically implied by professional standards in a specific domain.
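To make this point concrete for researchers who code observed diagnostic processes (e.g., from log files or think-aloud protocols), the following minimal sketch represents the eight activities as an enumeration and summarizes one coded sequence. All identifiers (`DiagnosticActivity`, `summarize_sequence`) and the example sequence are our own illustrative assumptions, not part of the taxonomy by Fischer et al. (2014). In line with the argument above, the summary only describes which activities were skipped or repeated; it is not meant as a quality score.

```python
from collections import Counter
from enum import Enum


class DiagnosticActivity(Enum):
    """The eight epistemic-diagnostic activities; numbers are labels, not a fixed order."""
    PROBLEM_IDENTIFICATION = 1
    QUESTIONING = 2
    HYPOTHESIS_GENERATION = 3
    ARTEFACT_CONSTRUCTION = 4
    EVIDENCE_GENERATION = 5
    EVIDENCE_EVALUATION = 6
    DRAWING_CONCLUSIONS = 7
    COMMUNICATION_AND_SCRUTINY = 8


def summarize_sequence(sequence):
    """Return (skipped, repeated) activities for one coded diagnostic process."""
    counts = Counter(sequence)
    skipped = [a for a in DiagnosticActivity if counts[a] == 0]
    repeated = [a for a in DiagnosticActivity if counts[a] > 1]
    return skipped, repeated


# Hypothetical coded sequence: the diagnostician returns to hypothesis
# generation after evaluating evidence and skips three activities.
A = DiagnosticActivity
example_case = [
    A.PROBLEM_IDENTIFICATION,
    A.HYPOTHESIS_GENERATION,
    A.EVIDENCE_GENERATION,
    A.EVIDENCE_EVALUATION,
    A.HYPOTHESIS_GENERATION,
    A.DRAWING_CONCLUSIONS,
]
skipped, repeated = summarize_sequence(example_case)

if __name__ == "__main__":
    print("skipped:", [a.name for a in skipped])
    print("repeated:", [a.name for a in repeated])
```

Because the sequence is stored as an ordered list rather than as a set of completed steps, circular processes and repetitions remain visible and can later be judged against the professional standards of the respective domain.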

It became clear early in research on diagnostic processes and strategies in medicine that diagnostic activities, such as the eight activities elaborated above, do not sufficiently characterize the requirements for appropriate cognitive performance. Research on the development of expertise in medicine indicated that inexperienced doctors seem to engage in most or all of these diagnostic activities, whereas experts seem to skip many steps and arrive at their conclusions more quickly and intuitively, a finding which can be explained by the encapsulation process introduced above.

It should be noted that in diagnostic situations with an emphasis on generating hypotheses, diagnostic activities other than the eight outlined above, which focus on confirming hypotheses, can come into play. In these hypothesis-generating situations, the focus is on filtering ongoing information regarding its relevance for the diagnostic task. A chain of inferences then occurs between observations and knowledge schemata, which provide explanations of observational patterns and their relationships. These processes have been identified in teacher education using the concept of professional vision (Gaudin & Chaliès, 2015; Sherin, Jacobs, & Philipp, 2011). In professional vision research, these diagnostic processes are referred to as noticing relevant information and as knowledge-based reasoning, including both explanations and predictions.

Even if not every single diagnostic situation is covered by them, classifications of diagnostic activities, such as the epistemic activities described above (Fischer et al., 2014), can be used as a descriptive framework with a high degree of validity for a broad variety of diagnostic situations within and across domains. However, the set of activities should not be understood as a revival of the scientific method, a style of scientific thinking focusing solely on hypothetico-deductive arguments which has dominated many science education curricula (Kind & Osborne, 2017). Different professions develop different standards for what it means to engage effectively and efficiently in these epistemic-diagnostic activities, how explicitly they should be conducted and how their results should be documented and communicated. For example, in the medical domain, there are explicit expectations regarding which diagnostic activities are considered necessary and in which order they should ideally be conducted (e.g. generating hypotheses based on some early information, then systematically collecting or generating evidence to test and exclude alternative hypotheses; Kassirer, 2010). Medicine has also developed practices and associated standards on how the process and the result of the diagnosing should be documented in written reports to colleagues. In the domain of teaching in schools, the diagnostic process of teachers in their classrooms is far less formalized, and with few exceptions, reports to colleagues are somewhat informal and are oral as opposed to following a schema or being written.

The eight diagnostic activities, based on the eight epistemic activities presented above, can provide a common language with which to compare what people do to generate knowledge in differently structured diagnostic situations and in different domains; what their emphases are; what parts of the process are more formalized; which parts are communicated and how, etc.

Based on the suggested (joint) notion of diagnostic competences for medical and teacher education, we will now shift the focus to possibilities for facilitating the development of diagnostic competences in higher education.

The application of knowledge in diagnostic activities is considered crucial to the development of diagnostic competences (e.g., Kolodner, 1992). Possibilities for the application of knowledge are typically part of real-life professional practice and not, as such, part of higher education programmes. It was shown in several domains that professionals engage with problem-solving in a specific way to further develop their competences (Ericsson, 2004). To develop professional expertise, successful learners show similar ways of practising, while they differ especially in their intentional, focused and repeated practising of the challenging aspects of a task. This idea has been used in medical as well as in teacher education (Berliner, 2001). In both fields, real-life practice might not be the most promising opportunity for developing diagnostic competences for students who are still in higher education programmes. In fields in which real-life situations are highly complex and dynamic, novices such as students can easily be overwhelmed. Under these circumstances, real-life approximation-of-practice (Grossman et al., 2009) can help to avoid the overwhelming of learners by reducing the complexity of the practical situation and by providing additional guidance (Gartmeier et al., 2015). Going beyond representations which illustrate practice for students, approximations enable engaging the learners with important aspects of practice (Grossman et al., 2009). Several instructional approaches have been developed which can guide the design of learning environments allowing the practising of professional tasks (e.g., van Merriënboer & Kirschner, 2018). In this article, we focus on educational simulations as one particularly promising approach which can serve as approximations-of-practice. There are economical, practical and ethical reasons for using simulations in educational settings (Ziv, Wolpe, Small, & Glick, 2003). 
In contrast to engaging in real practice, approximations-of-practice using simulations allow for engagement in critical but rare situations which do not often happen during internships (or, if they do happen, the intern is not the one asked to professionally address the issue). Approximations-of-practice through simulations also allow learners to engage in very difficult situations requiring repeated practice. Trying a difficult technique or intervention repeatedly is typically impossible or is at least undesirable in real encounters with patients or students. Thus, one main challenge is to discover how simulations can help overcome the shortcomings of real practice, such as low base rates of certain critical situations or the lack of opportunities for repeated practice. A second main challenge in designing simulation-based learning environments is learning how simulations can offer opportunities for knowledge application and practice without overwhelming the learner.

In the remainder of this section, we briefly introduce the aim and different types of simulations before reviewing research on using simulations to measure and facilitate diagnostic competences.

A simulation is a model of a natural or artificial system with certain features which can be manipulated. The aim of a simulation is to arrive at a better understanding of the interconnections of the variables in the system or to put different strategies for controlling the system to the test (Wissenschaftsrat, 2014, building on Frasson & Blanchard, 2012; Shannon, 1975). In research on learning and instruction and in medical education, two different types of simulations can be distinguished by their functions: 1) In discovery or inquiry learning, simulations represent a segment of reality. By manipulating variables in simulated systems (de Jong, 2006), learners can build knowledge about those variables and their interconnections, for example in chemical reactions (Linn, Lee, Tinker, Husic, & Chiu, 2006). This type of simulation is suitable for acquiring conceptual knowledge of the interconnection of entities in complex systems (de Jong, Hendrikse, & Meij, 2010). 2) In simulation training, learners get the opportunity to act in a simulation and thereby develop complex skills. Examples include pilots’ training in landing an airplane (Landriscina, 2011) or the enactment of a surgery in medical education (Al-Kadi & Donnon, 2013). This type of simulation has been used successfully in different areas, for example in economics (Ahn, 2008), in management (Stewart, Williams, Smith-Gratto, Black, & Kane, 2011), in nursing (Smith & Barry, 2013) and in medical education (Siebeck, Schwald, Frey, Röding, Stegmann, & Fischer, 2011; Stegmann et al., 2012; Cook et al., 2012; Okuda et al., 2009).

There are no strict boundaries between these two types of simulations. For example, through explorations of a simulation in an inquiry environment, learners can build conceptual knowledge about the interconnections between variables and later apply this knowledge in training to further develop their diagnostic or intervention-related competences.

The second type of simulation seems more appropriate for fostering diagnostic competences, because in higher education, the opportunities for knowledge application are rather scarce. With this focus, a simulation is a learning environment in which (1) a segment of reality (e.g. a professional situation) is presented in a way which enables engagement in diagnostic activities (e.g. through presenting the behaviour of a student or the results of a diagnostic test of a patient). In a simulation of this type, (2) learners’ actions influence the further development of the system.

Thus, a central goal of learning with simulations is to provide training opportunities in which learners can take diagnostic actions in cases with a certain similarity to cases in their professional practice (Gartmeier et al., 2015; Shavelson, 2013). In this sense, both digital simulations and role-play (e.g. taking on the role of a doctor or a patient in an anamnestic role-play or of a teacher or student in a diagnostic interview) have been used as simulation-based learning environments. In medical education, learning via simulations in skill labs is already common practice (Peeraer et al., 2007). There are empirical studies with simulations, such as role-play with standardized patients (Bokken, Linssen, Scherpbier, van der Vleuten, & Rethans, 2009), and the findings show that students benefit not only from directly interacting with a standardized patient but also from observing the interaction of a peer with a standardized patient (Stegmann et al., 2012). A wealth of empirical studies have recently been summarized in meta-analyses, establishing a medium positive effect for simulation-based learning in contrast to other forms of training for medical areas as different as clinical reasoning or resuscitation (Cook, 2014). In teacher education, there have been studies which assessed diagnostic competences with a computer-based simulation of a virtual classroom (Kaiser, Helm, Retelsdorf, Südkamp, & Möller, 2012; Südkamp, Möller, & Pohlmann, 2008) or teachers’ recommendations for the type of school a student should choose after primary school (Gräsel & Böhmer, 2013). However, the purpose of those simulations was not to foster the development of diagnostic competences but rather to measure the learners’ level of diagnostic competence. There has also been emphasis in teacher education on so-called clinical experience (Grossman, 2010) and clinical simulations (Dotger, 2013), which aim at advancing pre-service teachers’ knowledge and skills.

De Coninck, Valcke, Ophalvens and Vanderlinde (2019) recently reviewed research on simulations in teacher education. One important conclusion was that simulations need to be embedded in instructionally well-designed learning environments to be effective. Building on earlier work (e.g., Gartmeier, Bauer, Fischer, Karsten, & Prenzel, 2011), De Coninck et al. (2019) further developed an approach to using simulations in learning environments. Their goal was to facilitate teachers’ competence in engaging in effective communication with parents. They suggested four instructional principles for simulation-based learning environments (e.g. invoking a cyclical process that includes simulation-based experience, feedback and reflection) and found preliminary evidence for the perceived usefulness of online and face-to-face simulations which were designed using these principles. Although this line of research is highly promising, it has not yet yielded systematic experimental evidence with objective measures of competence which would help establish a theory-based model of instructional design for simulations.

To sum up, both medical and teacher education have developed conceptual accounts of the pedagogical potentials of simulations and have brought forward empirical studies indicating that simulations can provide opportunities for engaging students in realistic activities and that they can help both in assessing and in advancing competences, including diagnostic competences. Research on using simulations to advance competences has had a longer tradition and has yielded more empirical studies in medical education than in teacher education. Therefore, there is more systematic knowledge available in medical education on the question of the effective design of simulation-based learning environments.

While it is obvious from this available knowledge that there are clear advantages to the use of simulations, there is also theory and evidence that learners can be overwhelmed by being involved in solving complex problems. This is especially true for learners with little or no prior knowledge necessary to tackle the task (e.g., Renkl, 2014). In the next section, we will therefore analyse how learners can be effectively guided while learning with simulations.

There is ample evidence in research on simulations that learners benefit from additional instructional support (Cook et al., 2013; Lazonder & Harmsen, 2016; Wouters & van Oostendorp, 2013). Cook et al. (2013) analysed experimental studies in medical education regarding the effectiveness of 13 different design features. For instance, they studied whether the simulated scenarios were highly interactive, whether they differed widely in difficulty or offered a broad clinical variation and what the effects of repetitive, distributed and collaborative practice were. Effects were greater for more interactive, repetitive and distributed experiences. A broader range of difficulty and clinical variation as well as the availability of feedback and collaborative practice were effective on only some of the outcome measures considered and did not show consistent positive effects. Though interesting, the design features which Cook et al. (2013) considered were focused more on the training regimen applied in the simulation, that is, how long and in which sequence learners should engage in which kinds of scenarios. The meta-analysis did not address how learners were instructionally supported while dealing with the simulated scenarios, that is, how their problem-solving within the scenarios was supported. We therefore conclude that evidence from primary studies on more theoretically grounded mechanisms of instructional support is urgently needed.

A promising instructional design concept in this respect is scaffolding, which is adaptive support during the solution of a task. During scaffolding, a teacher, peer or computer-based system takes over elements of the task, so the learner must carry out only a part of the task within their reach (Wood, Bruner, & Ross, 1976). Scaffolding thus enables a learner to solve problems they would not be able to solve without that support (Quintana et al., 2004; Wood et al., 1976). The main functions of scaffolding include directing the attention to relevant but not obvious aspects of a task, reducing the degrees of freedom during the solution of a task, supporting learners in focusing on the goal, highlighting critical features of the task, supporting learners in dealing with failure and frustration and demonstrating important steps (Wood et al., 1976; see also Pea, 2004). A central aspect of scaffolding is that it is flexibly adapted to a learner’s progress. The goal of scaffolding is to support the learner in a way which increasingly makes self-regulated problem-solving possible (cf. Wecker & Fischer, 2011). In the context of simulations, we consider three forms of scaffolding as particularly relevant.

1) Prompting during the solution of a task. Learners’ task solutions can be facilitated with prompts (or hints), a basic form of scaffolding. Numerous empirical studies provide evidence for the effectiveness of support during the solution of tasks with respect to the application of knowledge. For instance, by means of socio-cognitive scaffolds (Fischer, Kollar, Stegmann, & Wecker, 2013), it is possible to support learners who must solve problems in collaboration with others. Scaffolds which guide learning partners to reciprocally refer to each other’s contributions were found to be particularly effective (Vogel, Wecker, Kollar, & Fischer, 2017). Prompts supporting the learners’ metacognition can also help them monitor their own learning processes (Bisra, Liu, Nesbit, Salimi, & Winne, 2018). In some cases, metacognitive prompts were only successful in combination with other kinds of instructional support (Berthold, Nückles, & Renkl, 2007; Roll, Holmes, Day, & Bonn, 2012; Stark, Tyroller, Krause, & Mandl, 2008).

2) Role-taking. In simulated diagnostic situations, learners can take on different roles: (a) the role of the diagnosing person (a physician or a teacher), (b) the person with the characteristic to be diagnosed (a patient or a student) or (c) an observer. It is often regarded as a necessary prerequisite for competence development that learners actively engage with learning tasks (Schank, Berman, & Macpherson, 1999). Therefore, it seems plausible that the role of the diagnosing person can particularly foster the development of diagnostic competences. However, a particular challenge in diagnostic situations is that it is often helpful if the diagnosing individual takes the perspective of the patient or the student. In teaching, the diagnosis of a misconception is facilitated if the diagnostician manages to relate a student’s observable solution strategies to potential misconceptions. Hence, taking the perspective of the student may be an important part of the task of diagnosing misconceptions. Role-taking in a simulation can therefore be regarded as a form of part-task practice (i.e. engaging just in the emulation of a student’s cognitive processing that constitutes part of the diagnostic task; see also 4C/ID, e.g. van Merriënboer & Kirschner, 2018, with respect to part-task practice), which reduces the complexity of the task. In line with the definition of scaffolding mentioned above, role-taking can thus be regarded as a form of scaffolding. Empirical findings show that acting in the role of the person with the characteristic to be diagnosed can have a positive effect on the development of diagnostic competences. The quality of perspective-taking is fostered by directly telling the learners to take on a role (Goeze, Zottmann, Vogel, Fischer, & Schrader, 2014). Research on vicarious learning (cf. van Gog & Rummel, 2010) has also provided some evidence suggesting that the role of the observer can positively affect diagnostic competences.
In a study in which doctor–patient communication was simulated, medical students learned just as much whether they participated actively or just observed the simulation (Stegmann et al., 2012). Further systematic research is needed to answer the question of how role-taking affects the development of diagnostic competences.

3) Reflection phases. Another promising form of scaffolding in simulations is adding reflection phases with the goal of reducing the time pressure which otherwise often exists in diagnostic situations in which a doctor or a teacher needs to act immediately. Thus, a more detailed planning of further steps is possible. Reflection can help learners adequately generate courses of action. Monitoring their own learning and performance is another important goal of competence development for learners. Besides feedback from other individuals (Hattie & Timperley, 2007), another important source of information for monitoring their own performance is feedback which learners generate themselves in reflection phases (Nicol & Macfarlane-Dick, 2006). In reflection phases, instructional material or an instructor can encourage learners to think about the goals of a procedure, to analyse their own performance and to plan further steps. Adding reflection phases to foster medical diagnostic competences has turned out to be a successful form of scaffolding (Ibiapina, Mamede, Moura, Eloi-Santos, & Van Gog, 2014; Mamede et al., 2012; Mamede et al., 2014). However, these initial findings from medical education are far from robust and conclusive. Moreover, reflection phases can be of various lengths, can be more or less structured by guiding questions, can take place in individual or collaborative settings and can take place before, during or after working with the simulation. The extent to which the effects of reflection phases generalize to diagnostic competences in teaching also remains an open question.

Explicit presentation of information. Scaffolded problem-solving is often contrasted with learning through lectures and book-reading. This explicit presentation of information can provide learners with additional conceptual or strategic knowledge, for example through additional lecture videos, which learners can access before, during or after work with the simulation. An adequate professional knowledge base which learners can apply to diagnostic tasks plays a major role in an early phase of competence development (Anderson & Lebiere, 1998; Schank et al., 1999; VanLehn, 1996). There is also evidence from learning with worked examples, in which learners with unfavourable learning prerequisites (e.g. low prior knowledge or poor self-regulatory skills, van Gog & Rummel, 2010) can benefit from the explicit presentation of information (e.g., Moreno, 2004). There is also research on the question of how phases of learner-directed inquiry and discovery activities can be productively combined with the explicit presentation of information. Research seems to suggest that there is a “time for telling” (Schwartz & Bransford, 1998; Wecker, Rachel, Heran-Dörr, Waltner, Wiesner, & Fischer, 2013); however, systematic research on combining phases of explicit presentation of information, scaffolding and unguided problem-solving in simulation-based learning is scarce.

To conclude, existing research on complex learning environments, including simulations, suggests that learning is more effective when the environments include instructional support (Lazonder & Harmsen, 2016; Wouters & van Oostendorp, 2013). We hence suggest investigating the effects of different approaches to instructional support in simulations, with a focus on concepts with a strong theory-based foundation. A promising example of such an instructional concept is scaffolding. Accordingly, we integrate into our conceptual framework different forms of scaffolding which we consider particularly promising in the context of learning to diagnose in simulations; these include support during problem-solving activities via prompts as well as role-taking and reflection phases (see the box on the left in Fig. 1). We also integrate the explicit presentation of information; there is “a time for telling” about domain concepts and strategies, and the timing does not seem to be arbitrary. Integrating both the different forms of scaffolding and the explicit presentation of information into the framework will enable research on their combination or succession in simulation-based learning. Examples are prompts which remind learners to implement a strategy they developed in the reflection phase, or reflection phases which follow a phase of self-directed problem-solving, with the explicit presentation of information introducing the relevant concepts for reflection.

The effects of simulations and instructional guidance on diagnostic competences will likely depend on differences between learners and on the characteristics of the simulated situation. The conceptual model entails important individual prerequisites and contextual factors as possible moderators of the effects of simulations and guidance (Fig. 1).

3.4.1. Learning prerequisites

It is unlikely that a simulation-based learning environment or any kind or combination of instructional support could have equally positive effects for all learners regardless of their learning prerequisites.

In research on learning and instruction, there is evidence of the moderating effects of individual learner characteristics. Such effects have been called aptitude-treatment interactions (Snow, 1991). Not much is known so far about aptitude-treatment interaction effects in learning with simulations. A comprehensive research programme on instructional support for the development of diagnostic competences in simulation-based learning environments must address the question of how important these prerequisites are for novel, dynamic contexts. In what follows, we suggest some promising cognitive, affective and personality-related moderators. These may serve as starting points for more systematic research on how learning prerequisites moderate the effects of simulation-based learning with and without additional instructional support.

Possibly the most important single cognitive prerequisite is prior knowledge (Gerard, Matuk, McElhaney, & Linn, 2015). Instructional support approaches which are highly effective for learners with low prior knowledge can be much less effective for or even detrimental to learners with more advanced prior knowledge (expertise reversal effect, Kalyuga, 2007).
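The expertise reversal effect is a moderation pattern: the benefit of instructional support depends on prior knowledge. As a minimal sketch, assuming a hypothetical 2 x 2 experiment (scaffolded vs. unsupported simulation, low vs. high prior knowledge), the interaction can be expressed as a difference of differences in group means. All names and scores below are invented for illustration and do not come from any of the cited studies.

```python
from statistics import fmean

# Hypothetical post-test scores, invented purely for illustration.
# Keys: (prior_knowledge, support_condition)
scores = {
    ("low", "scaffolded"): [14, 15, 13, 16],
    ("low", "unsupported"): [9, 10, 8, 11],
    ("high", "scaffolded"): [17, 16, 18, 17],
    ("high", "unsupported"): [18, 19, 17, 18],
}


def support_effect(prior_knowledge):
    """Mean benefit of scaffolding within one prior-knowledge group."""
    with_support = fmean(scores[(prior_knowledge, "scaffolded")])
    without_support = fmean(scores[(prior_knowledge, "unsupported")])
    return with_support - without_support


effect_low = support_effect("low")    # benefit for low prior knowledge
effect_high = support_effect("high")  # benefit for high prior knowledge

# Interaction contrast: a non-zero value indicates that prior knowledge
# moderates the effect of support; opposite signs of the two simple
# effects would correspond to a reversal.
interaction = effect_low - effect_high
```

In an actual study, such a contrast would be tested inferentially (e.g. with an interaction term in a linear model) rather than read off descriptive means.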

In novel tasks (where specific prior knowledge and experience are absent), general cognitive abilities have been shown to be highly predictive for learning. In particular, learners with high levels of fluid intelligence seem to have an advantage when engaging in new and complex tasks (cf. Schwaighofer, 2015). So-called executive functions have also come into focus in research on learning and instruction. Executive functions are responsible for self-regulation and include the ability to update and monitor representations in working memory, the ability to shift between parts of the task or between different mental representations and the ability to inhibit automatic or dominant responses (Miyake & Friedman, 2012). These functions seem to be particularly important in learning contexts (Schwaighofer, Fischer, & Bühner, 2015). While instructional research has focused on working memory functions for two decades now (e.g. Koopmann-Holm & O’Connor, 2017), shifting and inhibition have received much less attention. Recently, shifting has been shown to moderate the effects of instructional design features in complex learning with multiple sources of information (Schwaighofer, Bühner, & Fischer, 2016). Learners with lower shifting abilities benefitted more from scaffolding with worked examples than learners with higher shifting abilities. Schwaighofer et al. (2015) put forward the hypothesis that in complex learning environments, it is the coordinated interplay of the executive functions rather than one of these abilities in isolation which is responsible for successful learning.

Motivational and affective factors may also be key, for example interest (Rotgans & Schmidt, 2014), self-efficacy (Zimmerman, 2000) or epistemic emotions (Pekrun & Linnenbrink-Garcia, 2012). These variables have been included in studies both as moderating factors of the effect of complex learning on the development of competences and as outcomes in their own right. However, they have hardly been deployed systematically in research on learning with simulations. Simulations for learning as well as serious games have nourished hopes for increased motivation and the growing interest of learners (Sailer, Hense, Mandl, & Klevers, 2013; Wouters, Van Nimwegen, Van Oostendorp, & Van Der Spek, 2013).

Learning through interacting with a complex and dynamic simulation differs substantially from following a well-structured lecture or reading a chapter in a textbook. It seems likely that personality traits (e.g. openness to experience, Costa & McCrae, 1985) and individual preferences, such as the tolerance of ambiguity (e.g., Hancock, Roberts, Monrouxe, & Mattick, 2015), play a role in how individuals engage in and learn from simulations and in how they experience and benefit from additional instructional support (e.g. prompts or role-plays). Recently, relationships have been found between some personality traits, like diligence, and the development of competence (cf. Pellegrino & Hilton, 2013). However, there is hardly any systematic research which has investigated the potentially moderating effects of relatively stable personality traits on the development of competences in simulation-based learning.

Consequently, our conceptual framework for guiding research on learning to diagnose with simulations systematically addresses individual learning prerequisites (Fig. 1). These entail cognitive factors (e.g. prior professional knowledge, executive functions) as well as motivational and affective factors (e.g. interest, self-efficacy, epistemic emotions). The framework also includes personality traits (e.g. openness to experience). The benefit of identifying the dependencies of instructional effects on pre-existing interindividual differences between learners is obvious; the systematic inclusion of these factors will likely yield scientific knowledge which helps answer the question of for whom specific instructional designs are likely to be effective. Scientific knowledge on the moderating effects of individual learning prerequisites can therefore contribute to making simulation-based learning environments more adaptive and personalized.

Individual differences are not the only group of moderating factors we assume in our conceptual model. The other group of potential moderators is contextual (Fig. 1) and will be introduced in the next section.

3.4.2. Contextual factors

Typically, instructional researchers are alert to differences between ill-structured and well-structured domains with respect to effective instruction (Spiro, Feltovich, Jacobson, & Coulson, 1992), but they often assume that similar instructional effects can be obtained across different domains as long as the task structures are comparable. However, it is not at all self-evident that analogous instructional effects are obtained in different diagnostic situations within and across domains. The nature of the diagnostic situation is constituted by the features apparent in the situation where the diagnosis takes place. No established classification for the nature of diagnostic situations is available to date, not even in medicine, where research on diagnosing has a longer tradition than in teaching. Heuristically, diagnostic situations can be distinguished along two dimensions: (1) interaction-based vs. document-based diagnosis and (2) individual vs. collaborative diagnosis.

1) Interaction-based vs. document-based. The differentiation of interaction-based and document-based diagnosis is based on the main source of information necessary for the diagnosis. In interaction-based diagnosis (e.g. in anamnestic situations with a patient or during a diagnostic interview with a student concerning arithmetic skills), this information is gathered from the interaction with the patient or student. There is a high likelihood of time pressure and little opportunity for reflection. In document-based diagnosis (e.g. diagnosis based on a patient’s laboratory findings or diagnosis of a student’s competence level based on the analysis of written assignments), the necessary information is available in written, pictorial or video format. In these situations, there is typically no time pressure, and reflection is possible. The distinction between interaction-based and document-based diagnosis is also relevant from a practical point of view: the two types of situations might entail different demands, and hence, different instructional support might be necessary.

2) Individual vs. collaborative. The necessity for collaboration and communication with other professionals during the diagnostic process characterizes a second dimension of diagnostic situations. In many such situations, an individual diagnostician arrives at a diagnosis. However, there are also situations in which collaboration is necessary and routine, for example consultations of medical experts from different specialties on complex patient cases. Empirical studies provide initial evidence that the social regulation of diagnostic activities causes difficulties even for advanced practitioners (Christensen et al., 2000; Tschan et al., 2009). So far, however, there is little empirical research on the competencies necessary for such collaborations or on ways to foster them (cf. Kiesewetter, Fischer, & Fischer, 2017). In schools, collaborative diagnoses do not (yet) seem to be part of teachers’ daily routines. However, there are instances, for example with students having multiple learning difficulties in several subjects, in which teachers must collaboratively diagnose a student’s situation. Another still unexplored case of collaborative diagnosis in schools is the collaboration between teachers of different subjects in diagnosing cross-domain competencies, for example a biology and a physics teacher diagnosing students’ scientific reasoning skills based on their encounters with these students in their own lessons.

Our conceptual framework includes the diagnostic situation and the domain as contextual moderators (Fig. 1). The tasks which are considered diagnostic tasks vary greatly, even within a single domain. Regarding diagnostic situations, the conceptual framework will initially entail two heuristic dimensions along which diagnostic situations can be distinguished: interaction-based vs. document-based and individual vs. collaborative diagnosing. These two dimensions are not regarded as the only important aspects of diagnostic situations but are meant to serve as a starting point. Including them in a research framework allows the question of generalizability across types of simulated diagnostic situations (such as individual vs. collaborative diagnosis) and domains (such as medicine and teaching) to be addressed more systematically.

In the preceding sections of this article, we laid out a conceptual framework (Fig. 1) which may guide joint research programmes of medical and teacher education in analysing and facilitating diagnostic competences. One primary goal of such a research programme would be to advance the conceptual framework from being just a joint language to becoming a joint theory with specified relationships between its concepts. Such a research programme would first need to identify or develop and validate simulations as approximations of medical and teaching practices. Validation studies need to generate evidence that the simulation corresponds to the simulated situation, at least to a certain degree. Such a programme would also have to agree on ways to measure the different professional knowledge bases and their coordinated application as well as learners’ engagement in diagnostic activities (e.g. evaluating evidence) and the quality of their diagnoses, for instance, diagnostic accuracy (e.g., Hege, Kononowicz, Kiesewetter, & Foster-Johnson, 2018; Südkamp et al., 2012) and efficiency (e.g., Braun et al., 2017). These measurements will be instrumental in improving our understanding of how instructional support features (e.g. prompts, role-play, reflection phases) and their combination in simulation-based learning environments causally affect the development of diagnostic competences. Causal hypotheses are that instructional support leads to qualitatively improved engagement in diagnostic activities in simulation-based learning environments and that improved engagement in diagnostic activities, in turn, leads to an advancement of diagnostic competences. To test these types of hypotheses, experimental mediation designs with measures of the diagnostic activities or sequences thereof during the learning phase seem promising.
Combinations of scaffolding, with explicit presentation of information at varying points in time, enable the testing of hypotheses regarding the optimal timing for such explicit presentation of information in the context of scaffolded diagnostic activities.
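The mediation hypothesis described above can be written down as a standard linear mediation model. This is a sketch under assumptions, not a specification proposed in this article: $X$ denotes the experimentally varied instructional support, $M$ the measured quality of engagement in diagnostic activities during the learning phase, and $Y$ the diagnostic competence measured afterwards; the symbols and the linear form are chosen here for illustration only.

```latex
\begin{align}
  M &= i_M + a\,X + e_M \\
  Y &= i_Y + c'\,X + b\,M + e_Y \\
  \text{indirect effect} &= a\,b, \qquad
  \text{total effect } c = c' + a\,b
\end{align}
```

Under this formulation, the hypothesis that support fosters competences via improved diagnostic activities corresponds to an indirect effect $a\,b$ different from zero, while $c'$ captures any remaining direct effect of the support.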

Including cognitive, motivational and affective learning prerequisites in the research programme allows the assessment of how these prerequisites moderate the size or even the direction of instructional effects. For instance, the expertise reversal effect (Kalyuga, 2007), well established for example-based learning, may be tested for other forms of scaffolding. Generating more systematic scientific knowledge on the role of these prerequisites will eventually enable more adaptive designs of simulations and the instructional guidance they entail.

Regarding the contextual factors of the diagnostic situations, it seems promising to apply the same instructional approach to two or more diagnostic situations which differ markedly, to learn about how specific or general an instructional effect is. For example, the effects of reflection phases can be investigated for highly dynamic interaction-based situations and for less dynamic document-based diagnosing.

With respect to domain, it would seem an appropriate first step to use the same instructional approach in environments simulating structurally similar diagnostic situations (e.g. document-based and collaborative diagnosing) in at least two different domains. One testable hypothesis is that the effects of simulations and instructional guidance differ between different diagnostic situations within domains, probably even more than between the same types of diagnostic situations in different domains.

To seriously address the challenges of involving different fields such as the medical field and teacher education, a research programme also requires interdisciplinary research collaboration. In order to be able to compare the findings from different studies, the data structure needs to be consistent across corresponding research projects, and some of the variables introduced earlier need to be measured in identical or at least comparable ways (e.g. motivation, cognitive load). With learning prerequisites, diagnostic situations and domains treated as potential moderators of the overall effects, meta-analytic techniques could be used to synthesize the results from several experimental studies on the effects of various approaches of instructional support on the development of diagnostic competences.
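
The following sketch illustrates, under stated assumptions, what a consistent data structure and a moderator-wise synthesis could look like. The record fields, the function names and the example values are hypothetical and not taken from any of the cited studies. Pooling uses the DerSimonian-Laird random-effects estimator, which is one common choice among several.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class StudyResult:
    """One experimental comparison, reported in a shared format."""
    study_id: str
    domain: str       # e.g. "medicine" or "teaching"
    situation: str    # e.g. "document-based" or "interaction-based"
    effect: float     # standardised mean difference
    variance: float   # sampling variance of the effect


def pool_random_effects(results):
    """DerSimonian-Laird random-effects estimate for a list of studies."""
    weights = [1.0 / r.variance for r in results]
    total_w = sum(weights)
    fixed = sum(w * r.effect for w, r in zip(weights, results)) / total_w
    q = sum(w * (r.effect - fixed) ** 2 for w, r in zip(weights, results))
    c = total_w - sum(w * w for w in weights) / total_w
    # Between-study variance; set to zero when it cannot be estimated.
    tau2 = 0.0
    if len(results) > 1 and c > 0:
        tau2 = max(0.0, (q - (len(results) - 1)) / c)
    re_weights = [1.0 / (r.variance + tau2) for r in results]
    pooled = sum(w * r.effect for w, r in zip(re_weights, results)) / sum(re_weights)
    return {"k": len(results), "effect": pooled,
            "se": math.sqrt(1.0 / sum(re_weights)), "tau2": tau2}


def pool_by_moderator(results, moderator):
    """Pool studies separately for each level of a categorical moderator."""
    groups = defaultdict(list)
    for r in results:
        groups[getattr(r, moderator)].append(r)
    return {level: pool_random_effects(group) for level, group in groups.items()}


if __name__ == "__main__":
    # Made-up values for illustration only.
    studies = [
        StudyResult("A", "medicine", "document-based", 0.40, 0.04),
        StudyResult("B", "medicine", "interaction-based", 0.10, 0.05),
        StudyResult("C", "teaching", "document-based", 0.45, 0.03),
        StudyResult("D", "teaching", "interaction-based", 0.05, 0.06),
    ]
    for moderator in ("domain", "situation"):
        print(moderator, pool_by_moderator(studies, moderator))
```

Comparing the pooled estimates by `domain` with those by `situation` is one simple way to examine whether effects vary more across diagnostic situations than across domains; a full analysis would use meta-regression with appropriate significance tests.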

Research programmes such as the one outlined here will be instrumental in further advancing the conceptual framework into a theoretical account of how to instructionally support the development of diagnostic competences in simulation-based learning environments. Such research programmes can also contribute to resolving persisting issues in research on learning and instruction, such as the interaction of knowledge and reasoning; the differential effects of different types of scaffolding for differently advanced learners; the domain specificity of instructional guidance; and the role of the authenticity of learning environments in higher education for the development of competence.

The research for this article was funded by the German Research Foundation (Deutsche Forschungsgemeinschaft, DFG) (FOR2385).

Abs, H. J. (2007). Überlegungen zur Modellierung Diagnostischer Kompetenz bei Lehrerinnen und Lehrern [Considerations of modelling teachers’ diagnostic competence]. In M. Lüders & J. Wissinger (Eds.), Forschung zur Lehrerbildung: Kompetenzentwicklung und Programmevaluation [Research on teacher education. Competence development and program evaluation] (pp. 63–84). Waxmann Verlag.

Ahn, J.-H. (2008). Application of the experiential learning cycle in learning from a business simulation game. E-Learning, 5(2), 146–156. https://doi.org/10.2304/elea.2008.5.2.146

Al-Kadi, A. S., & Donnon, T. (2013). Using simulation to improve the cognitive and psychomotor skills of novice students in advanced laparoscopic surgery: a meta-analysis. Medical Teacher, 35(sup1), 47-55. doi: 10.3109/0142159X.2013.765549

Anderson, J. R., & Lebiere, C. J. (1998). The Atomic Components of Thought. Mahwah, NJ: Lawrence Erlbaum Associates. doi: 10.4324/9781315805696

Barrows, H. S., & Pickell, G. C. (1992). Developing clinical problem solving skills: A guide to more effective diagnosis and treatment. New York, NY: WW Norton & Co.

Bennett, R. E. (2011). Formative assessment: a critical review. Assessment in Education: Principles, Policy & Practice, 18(1), 5–25. https://doi.org/10.1080/0969594X.2010.513678

Berliner, D. C. (2001). Learning about and learning from expert teachers. International Journal of Educational Research, 35, 463–482. doi: 10.1016/S0883-0355(02)00004-6

Berthold, K., Nückles, M., & Renkl, A. (2007). Do learning protocols support learning strategies and outcomes? The role of cognitive and metacognitive prompts. Learning and Instruction, 17(5), 564–577. https://doi.org/10.1016/j.learninstruc.2007.09.007

Bisra, K., Liu, Q., Nesbit, J. C., Salimi, F., & Winne, P. H. (2018). Inducing Self-Explanation: a Meta-Analysis. Educational Psychology Review, 1-23. doi: 10.1007/s10648-018-9434-x

Bokken, L., Linssen, T., Scherpbier, A., van der Vleuten, C., & Rethans, J.-J. (2009). Feedback by simulated patients in undergraduate medical education: A systematic review of the literature. Medical Education, 43(3), 202–210. https://doi.org/10.1111/j.1365-2923.2008.03268.x

Borko, H., Roberts, S. A., & Shavelson, R. (2008). Teachers’ Decision Making: from Alan J. Bishop to Today. In P. Clarkson & N. Presmeg (Eds.), Critical Issues in Mathematics Education (1st ed., pp. 37–67). s.l.: Springer-Verlag.

Boshuizen, H. P. A., & Schmidt, H. (1992). On the role of biomedical knowledge in clinical reasoning by experts, intermediates and novices. Cognitive Science, 16(2), 153–184. https://doi.org/10.1016/0364-0213(92)90022-M

Boshuizen, H. P. A., & Schmidt, H. (2008). The development of clinical reasoning expertise. In J. Higgs, M. A. Jones, S. Loftus, & N. Christensen (Eds.), Clinical reasoning in the health professions (3rd ed., pp. 113–122). Amsterdam: Elsevier (Butterworth Heinemann).

Boshuizen, H. P. A., Schmidt, H., Custers, E., & Van de Wiel, M. (1995). Knowledge development and restructuring in the domain of medicine: The role of theory and practice. Learning and Instruction, 5(4), 269–289. https://doi.org/10.1016/0959-4752(95)00019-4

Braun, L. T., Zottmann, J. M., Adolf, C., Lottspeich, C., Then, C., Wirth, S., ... & Schmidmaier, R. (2017). Representation scaffolds improve diagnostic efficiency in medical students. Medical Education, 51(11), 1118-1126. doi: 10.1111/medu.13355

Charlin, B., Boshuizen, H. P. A., Custers, E. J., & Feltovich, P. J. (2007). Scripts and clinical reasoning. Medical Education, 41(12), 1178–1184. doi: 10.1111/j.1365-2923.2007.02924.x

Christensen, C., Larson, J. R., Jr, Abbott, A., Ardolino, A., Franz, T., & Pfeiffer, C. (2000). Decision making of clinical teams: communication patterns and diagnostic error. Medical Decision Making: An International Journal of the Society for Medical Decision Making, 20(1), 45–50. doi: 10.1177/0272989X0002000106

Cook, D. A. (2014). How much evidence does it take? A cumulative meta-analysis of outcomes of simulation-based education. Medical Education, 48(8), 750–760. https://doi.org/10.1111/medu.12473

Cook, D. A., Brydges, R., Hamstra, S. J., Zendejas, B., Szostek, J. H., Wang, A. T., … Hatala, R. (2012). Comparative effectiveness of technology-enhanced simulation versus other instructional methods: a systematic review and meta-analysis. Simulation in Healthcare, 7(5), 308–320. doi: 10.1097/SIH.0b013e3182614f95

Cook, D. A., Hamstra, S. J., Brydges, R., Zendejas, B., Szostek, J. H., Wang, A. T., … Hatala, R. (2013). Comparative effectiveness of instructional design features in simulation-based education: Systematic review and meta-analysis. Medical Teacher, 35(1), 867-898. doi: 10.3109/0142159X.2012.714886

Costa, P. T., & McCrae, R. R. (1985). The NEO personality inventory. Journal of Career Assessment, 3(2), 123–129.

Croft, P., Altman, D. G., Deeks, J. J., Dunn, K. M., Hay, A. D., Hemingway, H., ... & Riley, R. D. (2015). The science of clinical practice: disease diagnosis or patient prognosis? Evidence about “what is likely to happen” should shape clinical practice. BMC Medicine, 13, 1-8. doi: 10.1186/s12916-014-0265-4

De Coninck, K., Valcke, M., Ophalvens, I., & Vanderlinde, R. (2019). Bridging the theory-practice gap in teacher education: The design and construction of simulation-based learning environments. In K. Hellmann, J. Kreutz, M. Schwichow, & K. Zaki (Eds.), Kohärenz in der Lehrerbildung (pp. 263-280). Wiesbaden: Springer VS.

diagnosing. (n.d.). In Cambridge dictionary online. Retrieved 11:24, May 25, 2019, from https://dictionary.cambridge.org/dictionary/english/diagnosing

de Jong, T. (2006). Technological advances in inquiry learning. Science, 312(5773), 532–533. https://doi.org/10.1126/science.1127750

de Jong, T., Hendrikse, P., & Meij, H. van der. (2010). Learning mathematics through inquiry: A large-scale evaluation. In M. J. Jacobson & P. Reimann (Eds.), Designs for Learning Environments of the Future (pp. 189–203). Springer US. Retrieved from http://link.springer.com/chapter/10.1007/978-0-387-88279-6_7

Dotger, B. H. (2013). I Had No Idea: Clinical Simulations for Teacher Development. Information Age Publishing Inc.

Ericsson, K. A. (2004). Deliberate practice and the acquisition and maintenance of expert performance in medicine and related domains. Academic Medicine, 79(10), 70–81. doi: 10.1097/00001888-200410001-00022

Fischer, F., Kollar, I., Stegmann, K., & Wecker, C. (2013). Toward a script theory of guidance in computer-supported collaborative learning. Educational Psychologist, 48(1), 56–66. doi: 10.1080/00461520.2012.748005

Fischer, F., Kollar, I., Ufer, S., Sodian, B., Hussmann, H., Pekrun, R., … Eberle, J. (2014). Scientific reasoning and argumentation: Advancing an interdisciplinary research agenda in education. Frontline Learning Research, 2(2), 28–45. https://doi.org/10.14786/flr.v2i2.96

Förtsch, C., Sommerhoff, D., Fischer, F., Fischer, M., Girwidz, R., Obersteiner, A., ... & Seidel, T. (2018). Systematizing Professional Knowledge of Medical Doctors and Teachers: Development of an Interdisciplinary Framework in the Context of Diagnostic Competences. Education Sciences, 8(4), 207. doi: 10.3390/educsci8040207

Frasson, C., & Blanchard, E. (2012). Simulation-based learning. In N. Seel (Ed.), Encyclopedia of the Sciences of Learning (pp. 3076–3080). Boston: Springer.

Gartmeier, M., Bauer, J., Fischer, M. R., Karsten, G., & Prenzel, M. (2011). Modellierung und Assessment professioneller Gesprächsführungskompetenz von Lehrpersonen im Lehrer-Elterngespräch [Modeling and assessment of teachers' professional competence for parent-teacher conversations]. In O. Zlatkin-Troitschanskaja (Ed.), Stationen Empirischer Bildungsforschung (pp. 412-424). VS Verlag für Sozialwissenschaften. doi: 10.1007/978-3-531-94025-0_29

Gartmeier, M., Bauer, J., Fischer, M. R., Hoppe-Seyler, T., Karsten, G., Kiessling, C., … Prenzel, M. (2015). Fostering professional communication skills of future physicians and teachers: effects of e-learning with video cases and role-play. Instructional Science, 43(4), 443–462. https://doi.org/10.1007/s11251-014-9341-6

Gaudin, C., & Chaliès, S. (2015). Video viewing in teacher education and professional development: A literature review. Educational Research Review, 16, 41-67. doi: 10.1016/j.edurev.2015.06.001

Gerard, L., Matuk, C., McElhaney, K., & Linn, M. C. (2015). Automated, adaptive guidance for K-12 education. [Review]. Educational Research Review, 15, 41-58. doi: 10.1016/j.edurev.2015.04.001

Glogger-Frey, I., Herppich, S., & Seidel, T. (2018). Linking teachers’ professional knowledge and teachers’ actions: Judgment processes, judgments and training. Teaching and Teacher Education, 76(1), 176-180. doi:10.1016/j.tate.2018.08.00

Goeze, A., Zottmann, J. M., Vogel, F., Fischer, F., & Schrader, J. (2014). Getting immersed in teacher and student perspectives? Facilitating analytical competence using video cases in teacher education. Instructional Science, 42(1), 91–114. doi: 10.1007/s11251-013-9304-3

Gräsel, C. (1997). Wir können auch anders: Problemorientiertes Lernen an der Hochschule [We can also do things differently: Problem-oriented learning in higher education]. In H. Gruber & A. Renkl (Eds.), Wege zum Können. Determinanten des Kompetenzerwerbs. Bern: Huber.

Gräsel, C., & Böhmer, I. (2013). Die Übergangsempfehlung nach der Grundschule. Welche Informationen nutzen Lehrerinnen und Lehrer für die Entscheidung? [School tracking decisions after primary school: Which information do teachers use for their decision?]. In N. McElvany & H. G. Holtappels (Eds.), Empirische Bildungsforschung. Theorien, Methoden, Befunde und Perspektiven. Festschrift für Wilfried Bos (pp. 235–248). Münster u.a.: Waxmann.

Grossman, P. (2010). Learning to practice: The design of clinical experience in teacher preparation (policy brief). Washington, DC: American Association of Colleges for Teacher Education and National Education Association.

Grossman, P., & McDonald, M. (2008). Back to the future: Directions for research in teaching and teacher education. American Educational Research Journal, 45(1), 184-205. doi: 10.3102/0002831207312906

Grossman, P., Compton, C., Igra, D., Ronfeldt, M., Shahan, E.,

& Williamson, P. (2009). Teaching practice: A

cross-professional perspective. Teachers College Record,