Digital Dashboards for Summative Assessment and Indicators Misinterpretation: A Case Study

DOI: https://doi.org/10.53967/cje-rce.5269

Keywords: dashboard, learning analytics, skill evaluation, case study

Abstract

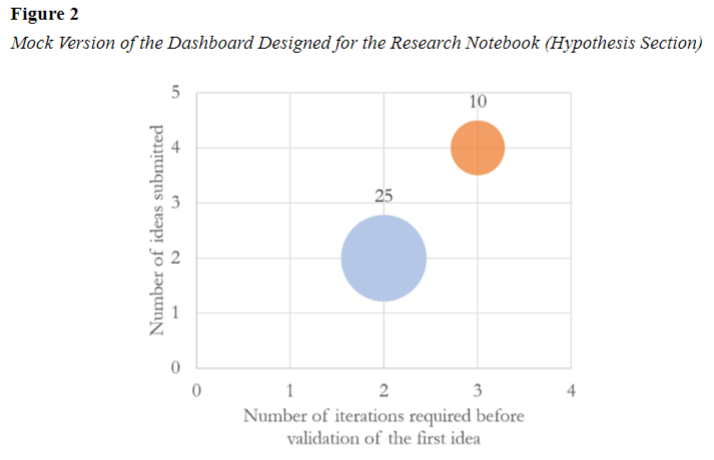

Over the last decade, teachers in France have been under increasing pressure to use digital learning environments and to shift from grade-based to skill-based assessment. Educational dashboards, which measure student input electronically, could support this transition by providing insights into learners’ performance. However, such dashboards could also lead to data misinterpretation during summative assessment if the indicators they display are used without a proper understanding of what they reflect. This article presents a methodology for detecting potential errors in the interpretation of these indicators in the context of inquiry-based learning. While designing a learning environment, we used analytics and classroom observations in primary and middle schools to analyze the issues that could arise from the use of a dashboard. Our data suggest that the amount of information practitioners needed to collect to make the indicators relevant was burdensome, which made the dashboard unfit for assessment purposes at the scale of a classroom.




License

Copyright (c) 2022 Canadian Society for the Study of Education

This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License.

The Canadian Journal of Education follows Creative Commons licensing (CC BY-NC-ND).